Nick Suntzeff's data reduction notes from when ANDICAM was used on the YALO-1m

These are notes that I write to myself when I reduce data. They may be of use when you are reducing data taken with CTIO telescopes.

The following are some brief notes on observing with the YALO IR channel, including standards and color terms. These are my experiences in taking and reducing the YALO IR data. All programs and scripts referred to in these notes can be obtained from my ftp area in the file yalo.tar.gz.

Rockwell 1024^2 HgCdTe "Hawaii" device

0.222"/pix for 3.4x3.4 arcmin

gain = 6.5 +/- 0.4 e-/ADU (measured Feb 2000)

ron = 13.4 +/- 1.0 e- (measured Feb 2000)

Numerical bias of 400DN is added to the data to make the output positive.

Dark is < 0.1DN/s

Minimum integration time is 4s. You can request 1-3s, but you will get 4s.

According to Darren Depoy, the upper value of DN is about 10,000 (<1% non-linear). I generally stick to <8000 to be safe.

Measured counting rates over 20 nights from Nov99-Mar00:

(J,H,K)=10 gives (6750,7200,4400) ADU/s in 10" diameter aperture (the size used by Persson, et al 1998)
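These count rates can be turned into a rough exposure estimate. The Python sketch below is a minimal illustration, and the function name `total_counts` is hypothetical. It assumes the rate scales only with flux, as 10^(-0.4(m-10)), and it ignores extinction and color terms. It predicts the total counts in the 10" aperture, not the peak pixel value, which depends on the seeing.

```python
# Count rates (ADU/s) for a J=H=K=10 star in a 10" diameter aperture,
# from the Nov99-Mar00 measurements quoted above.
RATE_AT_MAG10 = {"J": 6750.0, "H": 7200.0, "K": 4400.0}


def total_counts(band: str, mag: float, exptime: float) -> float:
    """Total ADU expected in the 10" aperture for a star of magnitude `mag`.

    Scales the mag-10 rate by 10**(-0.4 * (mag - 10)) and multiplies by the
    exposure time. Extinction and color terms are ignored.
    """
    rate = RATE_AT_MAG10[band] * 10 ** (-0.4 * (mag - 10.0))
    return rate * exptime


if __name__ == "__main__":
    for band in "JHK":
        print(band, round(total_counts(band, 15.0, 60.0)))
```

For example, a K=12.5 star in 4s gives about 1760 ADU summed over the aperture.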

| Band | Sky (ADU/s/pix, mean +/- sigma) | Sky (mag/arcsec^2) |
|---|---|---|
| J | 2.7 +/- 0.6 | 15.2 |
| H | 12.8 +/- 2.7 | 13.6 |
| K | 29.8 +/- 6.1 | 12.1 |
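The surface brightnesses in the sky table are consistent with converting the count rates using the 0.222"/pix scale and the mag-10 rates above. Here is a minimal sketch of that conversion. It assumes this is how the table was derived (the notes do not say so explicitly), and the function name is hypothetical.

```python
import math

PIXEL_SCALE = 0.222  # arcsec per pixel
RATE_AT_MAG10 = {"J": 6750.0, "H": 7200.0, "K": 4400.0}  # ADU/s, mag 10, 10" aperture


def sky_mag_per_arcsec2(band: str, sky_adu_per_s_per_pix: float) -> float:
    """Convert a sky rate in ADU/s/pix to mag/arcsec^2."""
    pix_area = PIXEL_SCALE ** 2                         # arcsec^2 per pixel
    sky_per_arcsec2 = sky_adu_per_s_per_pix / pix_area  # ADU/s/arcsec^2
    return 10.0 - 2.5 * math.log10(sky_per_arcsec2 / RATE_AT_MAG10[band])
```

This reproduces the J and H entries (15.2 and 13.6) to within 0.05 mag. For K it gives about 12.15, slightly brighter than the tabulated 12.1.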

J=H=7.5 in 4s is near 10000ADU peak.

K=7.5 in 4s is near 7500ADU peak. Short exposures in K are often diffraction limited.

CCD and IR orientation: (diagram lost in conversion; it showed the field outline with E marked at the top and N also marked on the field.)

CCD left amp is bad.

Columns past about 600 are affected by vignetting changes when the internal dither is used.

CCD to IR centers.

(1510,1030) on the CCD maps to (512,512) on the IR (23 Mar 2000).

The YALO IR channel allows an internal dither. The dither is specified in dither units where 1 unit = 0.5 arcseconds. I use dithers of 30-60 units for my early observations of supernovae.

The 7-point dither with a factor of 60 moves an object by the following amounts (in pixels):

| Position | x | y |
|---|---|---|
| 1 | 0 | 0 |
| 2 | -147 | 43 |
| 3 | 113 | -178 |
| 4 | 31 | 132 |
| 5 | 145 | -43 |
| 6 | -116 | 176 |
| 7 | -33 | -135 |

These offsets scale well with dither units. Thus for a dither of 30, just divide these numbers by 2.
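Because the offsets scale with the dither factor, a small helper can predict where an object lands for any dither setting. This sketch assumes the scaling is exactly linear, which is only as good as the "scale well" statement above. The function name is hypothetical.

```python
# Pixel offsets of the 7-point internal dither at a dither factor of 60,
# copied from the table above.
OFFSETS_AT_60 = [(0, 0), (-147, 43), (113, -178), (31, 132),
                 (145, -43), (-116, 176), (-33, -135)]


def dither_offsets(dither_units: float):
    """Approximate (x, y) pixel offsets for another dither factor (linear scaling)."""
    scale = dither_units / 60.0
    return [(dx * scale, dy * scale) for dx, dy in OFFSETS_AT_60]


if __name__ == "__main__":
    # A dither of 30 halves every offset.
    for pos, (dx, dy) in enumerate(dither_offsets(30), start=1):
        print(pos, dx, dy)
```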

I have an IRAF task called "dtilt" which will plot the dither positions on an IR image.

The dithering is very convenient but there are two problems.

1. The detector is badly vignetted for x>650 for dither positions other than the first one.

This is a serious problem and implies that you should never be using the detector area where x>650.

2. The fringing pattern in HK is a function of dither position.

If you want to go deep (H,K>15) the fringing will seriously affect your photometry.

I recommend not using the dither except for bright isolated objects. For my supernova programs, I have the telescope operator dither the telescope manually (by moving the guide star). If you do this, please note the following:

The guider camera at the 1m is not terribly sensitive. If you are working away from the Galactic plane, there may be few or no guide stars. If there is a suitable guide star in the field, instruct the observer to dither manually. Note that the guider scale is 0.72 arcsec per unit, and the field is laid out as follows:

Guider field: x runs from 014 at the left edge to 233 at the right edge, and y runs from 033 at the top edge to 234 at the bottom edge. S is at the top of the field and W is on the +x side. The numbers mark the guide-star position for each of the nine manual-dither exposures, relative to its starting position (x,y):

| | x-35 | x | x+35 |
|---|---|---|---|
| y-35 | 7 | 8 | 9 |
| y | 6 | 1 | 2 |
| y+35 | 5 | 4 | 3 |

I instruct the observer to dither as follows:

Start with the SN at (300,512) on the IR detector. Let us assume there is a guide star at guider position (x,y). The nine exposures are taken at the following positions (shown above in the diagram). Each telescope move is relative to the previous position.

1. original position, guider at (x,y), SN at (300,512) on IR detector

2. telescope 25"E, guider at (x+35,y)

3. 25"S, guider at (x+35,y+35)

4. 25"W, guider at (x,y+35)

5. 25"W, guider at (x-35,y+35)

6. 25"N, guider at (x-35,y)

7. 25"N, guider at (x-35,y-35)

8. 25"E, guider at (x,y-35)

9. 25"E, guider at (x+35,y-35)
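At 0.72"/unit, a 25" step is 25/0.72 = 34.7, which rounds to the 35 guider units used above. The sketch below lists the nine guider positions for a given starting position. It also checks that they stay inside the guider field, using x from 14 to 233 and y from 33 to 234 as read from the diagram. Both function names are hypothetical.

```python
GUIDER_SCALE = 0.72        # arcsec per guider unit
STEP_ARCSEC = 25.0         # telescope step between exposures
X_LIMITS = (14, 233)       # guider x range read from the diagram
Y_LIMITS = (33, 234)       # guider y range read from the diagram

# Offsets in units of one step for exposures 1-9, in the order listed above.
PATTERN = [(0, 0), (1, 0), (1, 1), (0, 1), (-1, 1),
           (-1, 0), (-1, -1), (0, -1), (1, -1)]


def guider_positions(x0, y0, step_arcsec=STEP_ARCSEC):
    """Guide-star positions on the guider for the nine manual-dither exposures."""
    step = round(step_arcsec / GUIDER_SCALE)  # 25 / 0.72 = 34.7 -> 35 units
    return [(x0 + i * step, y0 + j * step) for i, j in PATTERN]


def all_on_guider(positions):
    """True if every position stays inside the guider field."""
    return all(X_LIMITS[0] <= x <= X_LIMITS[1] and Y_LIMITS[0] <= y <= Y_LIMITS[1]
               for x, y in positions)
```

For example, a guide star starting at (100,150) stays on the guider for all nine positions. A star starting at x=20 does not, because it leaves the field at positions 5 to 7.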

The dome-on and dome-off frames are taken with ncoadds=10. The IRAF program "findgain" assumes that there are no coadds, so if you use it you must divide the calculated gain by ncoadds. I used the script "fgain" to calculate the gain.
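The usual photon-transfer estimate of the gain from two dome-on and two dome-off frames is gain = [(mean(ON1)+mean(ON2)) - (mean(OFF1)+mean(OFF2))] / [var(ON1-ON2) - var(OFF1-OFF2)]. The sketch below implements that formula in plain Python and applies the division by ncoadds described above. It illustrates the method and is not the fgain or findgain code. The function name is hypothetical.

```python
import statistics


def estimate_gain(on1, on2, off1, off2, ncoadds=1):
    """Photon-transfer gain estimate (e-/ADU) from DOME_ON and DOME_OFF pairs.

    Each argument is a flat sequence of pixel values (ADU) from the same
    detector region. Differencing the pairs removes fixed-pattern structure.
    Following the note above, the result is divided by ncoadds.
    """
    signal = (statistics.fmean(on1) + statistics.fmean(on2)
              - statistics.fmean(off1) - statistics.fmean(off2))
    var_on = statistics.pvariance([a - b for a, b in zip(on1, on2)])
    var_off = statistics.pvariance([a - b for a, b in zip(off1, off2)])
    return signal / (var_on - var_off) / ncoadds
```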

Standards, on the CIT system, were taken from:

Elias et al (1982). AJ, 87, 1029 (CIT system)

Elias et al (1983). AJ, 88, 1027 (CIT system)

Persson et al (1998). AJ, 116, 2475

Extinction discussion: Frogel (1998). PASP, 110, 200.

Note that the CIT standards are not quite the same as the Persson standards. The CIT system used a silicon window and the J bandpass, while having the same *filter* function, actually has a redder effective wavelength. The effect is that the CIT system is bluer than the Persson system for red stars.

Persson recommends transforming the CIT system to the LCO system via the expressions given in Table 7. I have transformed all CIT red stars using the Persson transformation for this photometry. The photometry library is given in "ir.lib" in DAOPHOT format.

If you want to observe standards, use 4s for the Elias standards and 10s for the Persson standards. A dither of 5 should give you excellent data.

The form of the transformation is:

j_nat = J + a0 + a1*(J-K) + a2*X + a3*T

h_nat = H + b0 + b1*(J-K) + b2*X + b3*T

or, with H-K as the color,

h_nat = H + b0 + b1*(H-K) + b2*X + b3*T

k_nat = K + c0 + c1*(J-K) + c2*X + c3*T

j_nat = aperture mag corrected to 10"

J, J-K = library values of photometry

X = airmass (the fit uses X-1)

T = UT time

The magnitude is measured relative to a zeropoint m=25 for a total detection of 1ADU/s in the full aperture.

In all cases there was no T dependence, and I set the a3, b3 and c3 terms to zero.

| Coefficient | Mean | m.e. | chi-sq | dof | red chi-sq | Color |
|---|---|---|---|---|---|---|
| A0 | 5.4095 | 0.0062 | 5.3 | 5 | 1.03 | |
| A1 | -0.0278 | 0.0032 | 4.4 | 5 | 0.94 | |
| A2 | 0.0286 | 0.0044 | 3.9 | 5 | 0.89 | |
| B0 | 5.3404 | 0.0062 | 5.9 | 5 | 1.09 | |
| B1 | 0.0103 | 0.0032 | 5.0 | 5 | 1.00 | J-K |
| B1 | 0.0217 | 0.0026 | 2.2 | 2 | 1.06 | H-K |
| B2 | 0.0269 | 0.0045 | 2.0 | 5 | 0.63 | |
| C0 | 5.8897 | 0.0062 | 3.9 | 5 | 0.89 | |
| C1 | -0.0027 | 0.0031 | 5.3 | 5 | 1.03 | |
| C2 | 0.0705 | 0.0034 | 9.0 | 5 | 1.34 | |
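Once instrumental magnitudes are on the m=25 zero point, the J-K form of the transformation can be inverted directly. The J and K equations share the color J-K, so subtracting them gives J-K = (j'-k')/(1+a1-c1). Here j' and k' are the instrumental magnitudes with the zero-point and extinction terms removed. The sketch below uses the mean coefficients from the table and assumes the airmass term enters as (X-1), as noted above. It also assumes the T terms are zero and uses the J-K form for H. The function names are hypothetical.

```python
import math

# Mean coefficients from the table above (J-K color form; T terms set to zero).
A0, A1, A2 = 5.4095, -0.0278, 0.0286
B0, B1, B2 = 5.3404, 0.0103, 0.0269
C0, C1, C2 = 5.8897, -0.0027, 0.0705


def instrumental_mag(adu_per_s: float) -> float:
    """Magnitude on the zero point m = 25 for 1 ADU/s in the 10" aperture."""
    return 25.0 - 2.5 * math.log10(adu_per_s)


def to_standard(j_nat: float, h_nat: float, k_nat: float, airmass: float):
    """Solve the J-K form of the transformation for (J, H, K).

    Assumes the airmass term is coefficient * (X - 1).
    """
    dx = airmass - 1.0
    jp = j_nat - A0 - A2 * dx          # = J + A1*(J-K)
    hp = h_nat - B0 - B2 * dx          # = H + B1*(J-K)
    kp = k_nat - C0 - C2 * dx          # = K + C1*(J-K)
    jk = (jp - kp) / (1.0 + A1 - C1)   # J-K
    return jp - A1 * jk, hp - B1 * jk, kp - C1 * jk
```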

The color terms are

[J,J-K] = -0.028 +/- 0.005

[H,J-K] = 0.010 +/- 0.005

[H,H-K] = 0.022 +/- 0.005

[K,J-K] = -0.003 +/- 0.005

Not surprisingly, the IR color terms are close to 0.

The J extinction is very weird here. The typical extinction coefficients (mag/airmass) should be

X(JHK)=(0.10,0.04,0.08)

but we measure

X(JHK)=(0.03,0.03,0.07)

I have no explanation for this. The extinctions are quite well determined.

The averaged observed values for the standards across all 6 nights are:

| Star | chi | K | err | J-K | err | H-K | err | nJ | nH | nK |
|---|---|---|---|---|---|---|---|---|---|---|
| g77-31 | 1.000 | 7.8566 | 0.0378 | 0.9258 | 0.0571 | 0.3160 | 0.0430 | 1 | 1 | 1 |
| hd22686 | 1.000 | 7.1299 | 0.0388 | 0.0311 | 0.0577 | 0.0483 | 0.0438 | 1 | 1 | 1 |
| hd38921 | 1.397 | 7.5360 | 0.0009 | 0.0347 | 0.0015 | 0.0144 | 0.0022 | 40 | 36 | 43 |
| hd75223 | 1.453 | 7.2820 | 0.0011 | 0.0416 | 0.0020 | 0.0161 | 0.0031 | 31 | 23 | 38 |
| gl347a | 1.258 | 7.6308 | 0.0014 | 0.8028 | 0.0020 | 0.2339 | 0.0016 | 36 | 35 | 35 |
| hd106965 | 3.073 | 7.3117 | 0.0011 | 0.0611 | 0.0027 | 0.0183 | 0.0038 | 30 | 24 | 35 |
| p9118 | 0.696 | 11.2698 | 0.0025 | 0.4676 | 0.0052 | 0.1002 | 0.0032 | 11 | 13 | 13 |
| p9135x | 1.140 | 12.0712 | 0.0070 | 0.3449 | 0.0087 | 0.0463 | 0.0076 | 19 | 20 | 20 |
| p9149 | 1.076 | 11.8694 | 0.0078 | 0.3470 | 0.0082 | 0.0468 | 0.0081 | 21 | 21 | 21 |
| lhs191 | 0.781 | 10.6784 | 0.0036 | 0.9417 | 0.0044 | 0.3916 | 0.0039 | 19 | 21 | 21 |
| iras537w | 1.135 | 10.0583 | 0.0043 | 2.8830 | 0.0070 | 0.9662 | 0.0052 | 13 | 13 | 13 |
| lhs2026 | 0.673 | 11.1269 | 0.0029 | 0.9162 | 0.0036 | 0.3612 | 0.0033 | 21 | 21 | 21 |
| cskd-8 | 0.608 | 9.1582 | 0.0018 | 2.6326 | 0.0029 | 0.7861 | 0.0021 | 21 | 21 | 21 |
| cskd-9 | 0.549 | 9.1360 | 0.0016 | 2.2291 | 0.0026 | 0.6301 | 0.0019 | 21 | 21 | 21 |
| iras537sx | 4.484 | 11.0289 | 0.0134 | 2.3007 | 0.0270 | 0.7589 | 0.0229 | 20 | 20 | 21 |

The library values are

| Star | K | err | J-K | err | H-K | err | Source |
|---|---|---|---|---|---|---|---|
| g77-31 | 7.840 | 0.0100 | 0.927 | 0.0100 | 0.329 | 0.0100 | CIT_to_Persson |
| hd22686 | 7.185 | 0.0070 | 0.010 | 0.0070 | 0.005 | 0.0070 | CIT_to_Persson |
| hd38921 | 7.535 | 0.0050 | 0.035 | 0.0050 | 0.015 | 0.0050 | CIT_to_Persson |
| hd75223 | 7.280 | 0.0050 | 0.045 | 0.0050 | 0.015 | 0.0050 | CIT_to_Persson |
| gl347a | 7.630 | 0.0100 | 0.801 | 0.0100 | 0.236 | 0.0100 | CIT_to_Persson |
| hd106965 | 7.315 | 0.0050 | 0.060 | 0.0050 | 0.021 | 0.0050 | CIT_to_Persson |
| p9118 | 11.264 | 0.0160 | 0.459 | 0.0110 | 0.093 | 0.0090 | Persson |
| p9135x | 12.071 | 0.015 | 0.322 | 0.015 | 0.027 | 0.015 | average of Persson and me |
| p9149 | 11.861 | 0.0050 | 0.352 | 0.0070 | 0.056 | 0.0060 | Persson |
| lhs191 | 10.667 | 0.0200 | 0.954 | 0.0130 | 0.391 | 0.0120 | Persson |
| iras537w | 9.981 | 0.0130 | 2.993 | 0.0090 | 1.051 | 0.0090 | Persson |
| lhs2026 | 11.129 | 0.0070 | 0.937 | 0.0060 | 0.368 | 0.0050 | Persson |
| cskd-8 | 9.151 | 0.0110 | 2.590 | 0.0100 | 0.762 | 0.0100 | Persson |
| cskd-9 | 9.161 | 0.0110 | 2.211 | 0.0100 | 0.627 | 0.0100 | Persson |
| iras537s | 10.972 | 0.0140 | 2.883 | 0.0120 | 1.115 | 0.0090 | Persson |

The observed mags for iras537s were quite different than the values in the Persson table. I did not use it in the solution.

There are large correlated O-C differences here for the red stars.
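To see the O-C pattern, you can difference the two tables directly. The sketch below does this for a subset of the standards, with values copied from the tables above. The function name is hypothetical.

```python
# (K, J-K, H-K) for a few standards, copied from the two tables above.
OBSERVED = {
    "hd38921": (7.5360, 0.0347, 0.0144),
    "gl347a": (7.6308, 0.8028, 0.2339),
    "lhs2026": (11.1269, 0.9162, 0.3612),
    "iras537w": (10.0583, 2.8830, 0.9662),
    "cskd-8": (9.1582, 2.6326, 0.7861),
    "cskd-9": (9.1360, 2.2291, 0.6301),
}
LIBRARY = {
    "hd38921": (7.535, 0.035, 0.015),
    "gl347a": (7.630, 0.801, 0.236),
    "lhs2026": (11.129, 0.937, 0.368),
    "iras537w": (9.981, 2.993, 1.051),
    "cskd-8": (9.151, 2.590, 0.762),
    "cskd-9": (9.161, 2.211, 0.627),
}


def o_minus_c(star):
    """Observed minus library values for (K, J-K, H-K)."""
    return tuple(o - c for o, c in zip(OBSERVED[star], LIBRARY[star]))


if __name__ == "__main__":
    for star in OBSERVED:
        k, jk, hk = o_minus_c(star)
        print(f"{star:10s} {k:+.3f} {jk:+.3f} {hk:+.3f}")
```

For iras537w, for example, O-C is +0.077 in K and -0.110 in J-K.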

If you need absolute photometry, you should include a Persson standard before or after your observation (on a photometric night). Make sure the standard is very near in airmass (and preferably near on the sky) to your object. If this is done over a few photometric nights, using the transformations above, you should be able to transform your photometry to an absolute scale. You should observe the Elias standards for 4s and the Persson standards for 15s. A dither of 30 units over 5 positions for each color will give a good calibration.

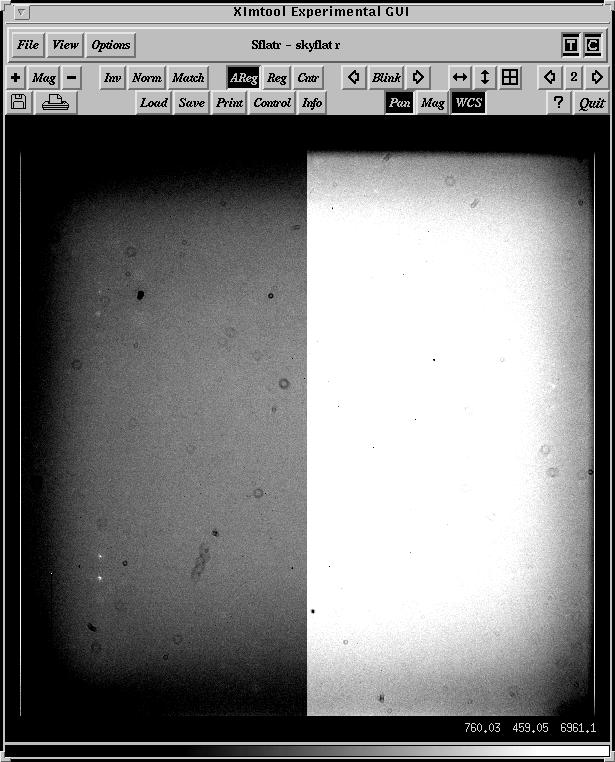

The YALO observer will take dome flats for you. These are 4s exposures with coadds=10 at the dither=1 position. They are processed for you as DOME_ON - DOME_OFF and will be placed in your directory for each night of observation. This is the standard flat field. For most observers, this is all the calibration that you need. I, however, ask for additional data.

1. I ask that along with the JHK (DOME_ON - DOME_OFF), they also copy the averaged DOME_ON and DOME_OFF data to my tape. The DOME_OFF data is a very quick check on the "bias" level of the chip. You can also use these data to calculate the gain and read noise.

2. I plan my observations to have set exposure times, typically 45s, 60s, or 90s. For each set exposure time I use, I ask the night assistant to observe dark frames for me, and to average the darks and write them to the tape (I don't save the individual darks). I ask for 20 darks for each exposure time. These darks are the actual zero structure of the chip. These data are needed if you want to understand the vignetting.

Don't be fooled into believing that the darks are sufficient for the warm pixel removal. Like all IR detectors, this one has lots of warm pixels. Most of the charge injection into these pixels is constant, so the subtraction of the dark can remove it, but a small number of the warm pixels vary during the night. The only way to remove these warm pixels is by the usual sky subtraction.

I am not going to tell you how to reduce your data. But here are some hints on the YALO data.

Preliminaries:

1. The YALO FITS headers have some features which I change.

equinox ==> epoch

observat ==> "ctio"

and move the jd to JD-OLD

I run the script "yalohead" to convert the FITS headers into something more standard for IRAF.

2. Then run

setjd ir*.fits date="UTDATE" time="UT" exposure="EXPTIME" epoch="EQUINOX"

to set the JD.

3. Set the airmass

setairmass ir*.fits

4. I have written a small task to put a dither parameter in the header.

If you have standards that were taken with dithers, you may want to use this.

task dtilt = home$scripts/dtilt.cl

dtilt:

| Parameter | Value | Description |
|---|---|---|
| image | "a*.imh" | input images |
| (dither) | 30 | Tilt step: 10, 20, 30, etc. |
| (tilt1) | 1535 | Tilt position 1 |
| (tilt2) | 2440 | Tilt position 2 |
| (tilt3) | 2070 | Tilt position 3 |
| (imglist) | "tmp$tmp.562ga" | |
| (mode) | "ql" | |

Check the tilt parameters first as:

hsel *.fits $I,tilt1,tilt2,tilt3 yes

dtilt *.fits

If there are vignetting problems, it is easier to deal with the data if you copy all the J data (ir*.fits, ir*.J_OFF.fits, ir*.flatj.fits) to a directory called "j". Same for H and K. For some reason, ccdred gets annoyed if the data are not in *.imh format. You must copy the *flat*.fits data to *flat*.imh, where these data are the ON-OFF dome data.

hsel ir*.fits $I,irfltid yes | grep "J" - | fields - 1 > inj

hsel ir*.fits $I,irfltid yes | grep "H" - | fields - 1 > inh

hsel ir*.fits $I,irfltid yes | grep "K" - | fields - 1 > ink

Copy the images and rename them to something simpler, like a???.imh.

To copy images from *.fits to *.imh, you can use:

task cpimh = /uw50/nick/scripts/cpimh.cl

cpimh ir000323.K_OFF

This task copies the *.fits to *.imh in the user directory.

The IR detector has a numerical bias of 400 units. On top of that, the dark frame at the same exptime as an object frame has warm pixels that are similar to biases. The biases we will use are in order of preference:

1. A dark taken at the same time as the object frame.

2. The DOME_OFF frame

Note that the K DOME_OFF actually has some light on it. You must run getsky on this image, see what the sky value is, and subtract a constant to bring it to 400.

imar ir000323.K_OFF - 460 ir000323.K_OFF

3. A numerical bias frame with 400. in all the pixels. If you have to make a numerical bias, then:

imcopy ir991121.flatj zero400

imrep zero400 400. lower=INDEF upper=INDEF

hedit zero400 IMAGETYP zero up+

hedit zero400 title "Numerical bias of 400" up+

For the DOME_OFF, dark, or a constant bias of 400 frame, you must declare the image as a ZERO image.

hedit ir000323.H_OFF IMAGETYP zero up+ ver-

0. Copy the flats to *.imh

task cpimh = /uw50/nick/scripts/cpimh.cl

cpimh *.flat?.fits delin+

1. The data called ir991121.flatj etc. are the flats calculated as DOME_ON-DOME_OFF.

YOU MUST EDIT THE HEADER OF YOUR FLAT FRAME TO SHOW THAT THESE FLATS ARE ZERO-CORRECTED. (They are already zero-corrected because they were calculated as DOME_ON-DOME_OFF). IF YOU DON'T DO THIS, THE FLATS WILL BE ZERO-CORRECTED BY CCDPR, AND THIS IS VERY WRONG!

hedit *flat*.imh ZEROCOR "Corrected by DOME_OFF" add+

2. The flats may have 0 value pixels, which will cause the ccdpr to crash.

The low pixels should be replaced by a large number (here I chose saturation) so that in the division, they will be close to 0. You may want to run the flats through imrep as:

imreplace *flat*.imh 10000 lower=INDEF upper=1

Now the ccd reduction:

With the parameters set, you just run:

ccdpr a*.fits nop+

to see what happens and then

ccdpr a*.fits

MAKE SURE THE FLAT DOES NOT GET ZERO SUBTRACTED!

ccdr:

| Parameter | Value | Description |
|---|---|---|
| (pixeltype) | "real real" | Output and calculation pixel datatypes |
| (verbose) | yes | Print log information to the standard output? |
| (logfile) | "logfile" | text log file |
| (plotfile) | "" | Log metacode plot file |
| (backup) | "" | Backup directory or prefix |
| (instrument) | "myiraf$/yalo_ir.dat" | CCD instrument file |
| (ssfile) | "myiraf$/yalo_ir.sub" | Subset translation file |
| (graphics) | "stdgraph" | Interactive graphics output device |
| (cursor) | "" | Graphics cursor input |
| (version) | "2: October 1987" | |
| (mode) | "ql" | |
| ($nargs) | 0 | |

ccdpr:

| Parameter | Value | Description |
|---|---|---|
| images | "a*.imh" | List of CCD images to correct |
| (output) | "" | List of output CCD images |
| (ccdtype) | "" | CCD image type to correct |
| (max_cache) | 0 | Maximum image caching memory (in Mbytes) |
| (noproc) | no | List processing steps only? |
| (fixpix) | no | Fix bad CCD lines and columns? |
| (overscan) | no | Apply overscan strip correction? |
| (trim) | no | Trim the image? |
| (zerocor) | yes | Apply zero level correction? |
| (darkcor) | no | Apply dark count correction? |
| (flatcor) | no | Apply flat field correction? |
| (illumcor) | no | Apply illumination correction? |
| (fringecor) | no | Apply fringe correction? |
| (readcor) | no | Convert zero level image to readout correction? |
| (scancor) | no | Convert flat field image to scan correction? |
| (readaxis) | "line" | Read out axis (column\|line) |
| (fixfile) | "" | File describing the bad lines and columns |
| (biassec) | "" | Overscan strip image section |
| (trimsec) | "" | Trim data section |
| (zero) | "zero400" | Zero level calibration image |
| (dark) | "" | Dark count calibration image |
| (flat) | "ir*.flat*.imh" | Flat field images |
| (illum) | "" | Illumination correction images |
| (fringe) | "" | Fringe correction images |
| (minreplace) | 1. | Minimum flat field value |
| (scantype) | "shortscan" | Scan type (shortscan\|longscan) |
| (nscan) | 1 | Number of short scan lines |
| (interactive) | yes | Fit overscan interactively? |
| (function) | "legendre" | Fitting function |
| (order) | 4 | Number of polynomial terms or spline pieces |
| (sample) | "*" | Sample points to fit |
| (naverage) | 1 | Number of sample points to combine |
| (niterate) | 3 | Number of rejection iterations |
| (low_reject) | 2.5 | Low sigma rejection factor |
| (high_reject) | 2.5 | High sigma rejection factor |
| (grow) | 0. | Rejection growing radius |
| (mode) | "ql" | |

myiraf$/yalo_ir.dat:

exptime exptime

imagetyp imagetyp

subset IRFLTID

OBJECT object

DARK zero

FLAT flat

BIAS zero

MASK other

myiraf$/yalo_ir.sub:

'H' H

'J' J

'K' K

Reduce the data to [Z] with ccdpr. We will do the flatfields and badpix fixing later after the sky subtraction.

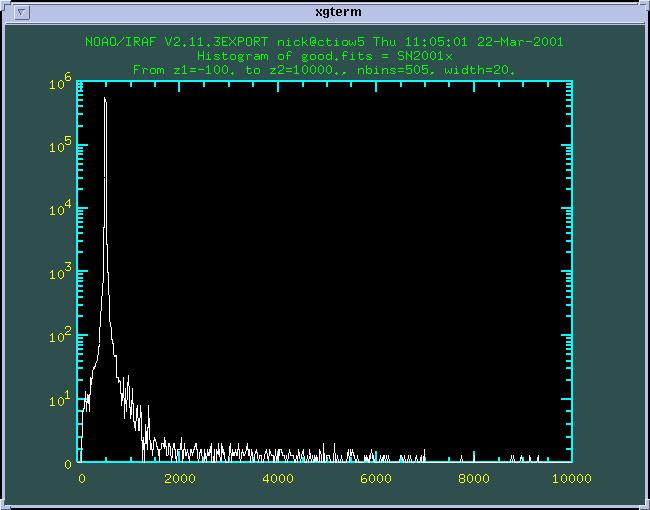

Make a mask image as follows. Here we use the dome flats for the mask. Note that there are very many warm pixels with the detector and about 10% of these change flux during the night. If the warm pixels change flux between the ON and OFF images, they will be flagged as bad pixels here.

The philosophy of the masks is that all pixels in a normalized image that are less than some value like 0.7 are probably bad, and will be marked as bad pixels.

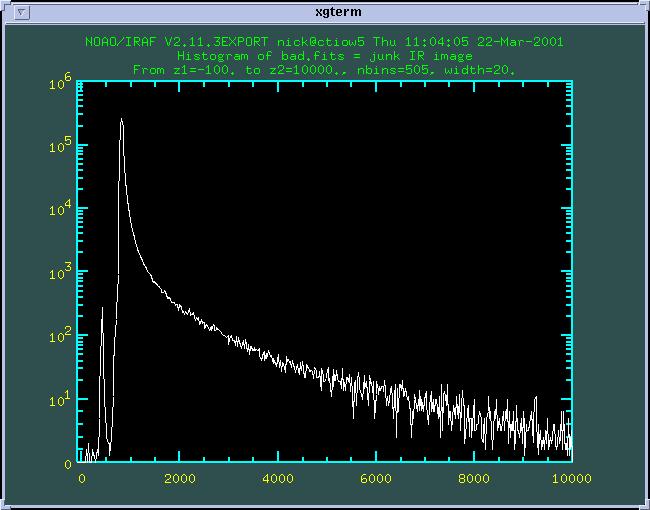

mask1.cl:

# to make the mask, use imhist and look for the limits

# first flatten the flats and remove the edge defects

#

string img

img = "ir000323.flath"

#

fmed(img,"mask", xwin=1, ywin=101, boundary="wrap")

imar(img, "/", "mask", "mask")

imrep mask[*,1:9] 0 lower=INDEF upper=INDEF

imrep mask[*,1021:1024] 0 lower=INDEF upper=INDEF

imrep mask[1:1,*] 0 lower=INDEF upper=INDEF

imrep mask[1021:1024,*] 0 lower=INDEF upper=INDEF

#

# now check the histogram and change the limits if needed.

#

imhist mask z1=0.4 z2=1.4 nbins=100

mask2.cl

#

# good pix are 0, bad are 1 for IRAF mask

# the limits lll and uuu below (here 0.8 and 1.17) need to be checked

# on the histogram each time you make the mask.

#

real lll, uuu

lll = 0.8

uuu = 1.17

displ mask 1

imrep("mask", lower=INDEF, upper=lll, val=-1 )

imrep("mask", lower=uuu, upper=INDEF, val=-1)

imrep("mask", lower=lll, upper=uuu, val=0)

imar mask * mask mask

imcopy mask.imh mask.pl

# make DAOPHOT mask where bad pix are 0 and good are 1

imrename mask.imh maskdao

imar maskdao - 1 maskdao

imar maskdao * -1 maskdao

#

displ mask.pl 2 zs- zr- z1=0 z2=1

You can check frames 1,2 to see if the mask looks good.
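The logic of mask1.cl and mask2.cl can be restated in a few lines of plain Python. Each flat pixel is divided by a running median along its column (as fmed does with xwin=1, ywin=101 and a wrapped boundary). Pixels at or outside the limits are then flagged, and the IRAF (bad=1) and DAOPHOT (good=1) masks are produced. This sketch omits the edge trimming done in mask1.cl. The default limits are the ones used in mask2.cl and must still be checked against the histogram. Both function names are hypothetical.

```python
import statistics


def column_median_normalize(flat, ywin=101):
    """Divide each pixel by the median of a ywin-pixel window along its column.

    flat is a list of rows (flat[y][x]); windows wrap around the column ends.
    """
    ny, nx = len(flat), len(flat[0])
    half = ywin // 2
    norm = [[0.0] * nx for _ in range(ny)]
    for x in range(nx):
        col = [flat[y][x] for y in range(ny)]
        for y in range(ny):
            window = [col[(y + k) % ny] for k in range(-half, half + 1)]
            norm[y][x] = col[y] / statistics.median(window)
    return norm


def make_masks(norm, lower=0.8, upper=1.17):
    """Return (IRAF mask, DAOPHOT mask) from a normalized flat.

    IRAF convention: bad = 1, good = 0. DAOPHOT convention: bad = 0, good = 1.
    Pixels at or outside the limits are bad, matching the imreplace ranges.
    """
    iraf = [[0 if lower < v < upper else 1 for v in row] for row in norm]
    dao = [[1 - m for m in row] for row in iraf]
    return iraf, dao
```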

Make inj,inh,ink files for all the SN data. These will be used to make the sky.

task irsky = home$scripts/irsky.cl

hsel @in1 $I,irfltid yes | grep "J" - | fields - 1 > inj

hsel @in1 $I,irfltid yes | grep "H" - | fields - 1 > inh

hsel @in1 $I,irfltid yes | grep "K" - | fields - 1 > ink

Run irsky:

irsky:

| Parameter | Value | Description |
|---|---|---|
| images | "@inj" | input images |
| (statsec) | "[10:700,10:1010]" | Stat sec |
| (sigma) | 2.5 | sigma clip for stats |
| (niter) | 5 | iterations for sigma |
| (irfltid) | "IRFLTID" | keyword for filter |
| (outimage) | "Sky" | Output root for sky image |
| (nlow) | 0 | number of low pixels to reject |
| (nhigh) | 1 | number of high pixels to reject |
| (combine) | "median" | type of combine function |
| (reject) | "minmax" | type of rejection |
| (imglist1) | "t1.jnk" | |
| (mode) | "ql" | |

irsky f*.imh

You may have to play with the nhigh to reduce the print-through.
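The print-through comes from the per-pixel median. If a star lands on the same pixel in too many of the dithered frames, rejecting a single high value is not enough. Below is a small sketch of a minmax-rejection median with nlow=0 and nhigh=1, as in the irsky parameters above. It assumes the frames are already on a common sky level and does not reproduce irsky's scaling. Note that Python's `statistics.median` averages the two middle values when an even number of values survive. The function name is hypothetical.

```python
import statistics


def combine_minmax_median(frames, nlow=0, nhigh=1):
    """Per-pixel median after dropping the nlow lowest and nhigh highest values.

    frames is a list of equally sized flat pixel lists, one per exposure.
    """
    combined = []
    for values in zip(*frames):
        ordered = sorted(values)
        kept = ordered[nlow:len(ordered) - nhigh]
        combined.append(statistics.median(kept))
    return combined
```

With five frames of sky 100 and a star of 600 on the same pixel in two of them, the result is still 100. With the star in three frames, it jumps to 350, which is the kind of print-through that raising nhigh (or dithering further) is meant to suppress.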

This program outputs a file called sub.cl. Edit sub.cl to output s???.imh

imdel tempsky

imar SkyJ * 1.0095662405725 tempsky

imar ir000528.0203 - tempsky s203

imdel tempsky

imar SkyJ * 1.0479313902369 tempsky

imar ir000528.0204 - tempsky s204

etc.

This is now sky subtracted data. All the data should be near 0 sky. You can check this with getsky.

task getsky = home$scripts/getsky.cl
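Each pair of lines in sub.cl scales the combined sky to the level of one image and subtracts it. These notes do not say how irsky computes the scale factors. The sketch below assumes they are ratios of the image and sky medians, and the function name is hypothetical.

```python
import statistics


def subtract_scaled_sky(image, sky):
    """Scale the sky frame to the image's median level and subtract it.

    image and sky are flat sequences of pixel values. Returns (scale, residual).
    """
    scale = statistics.median(image) / statistics.median(sky)
    residual = [p - scale * s for p, s in zip(image, sky)]
    return scale, residual
```

After a good subtraction the residual should sit near 0 everywhere except on the objects, which is what getsky checks.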

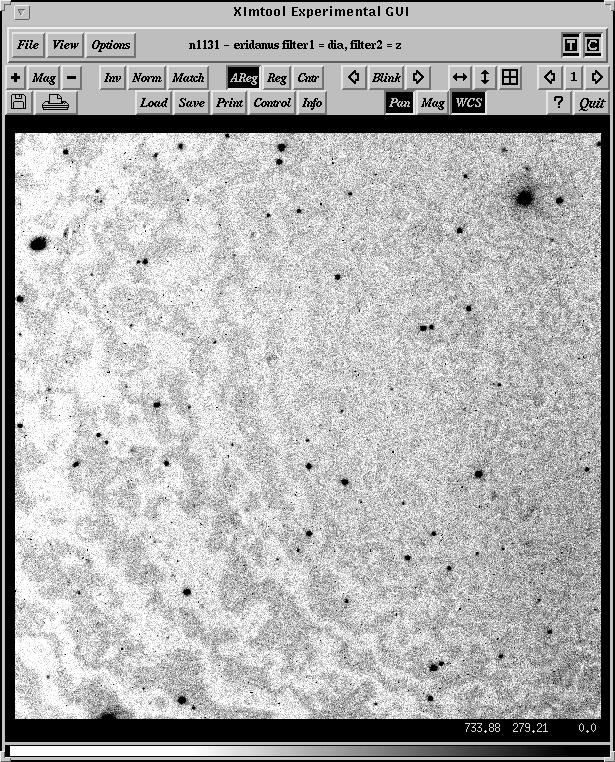

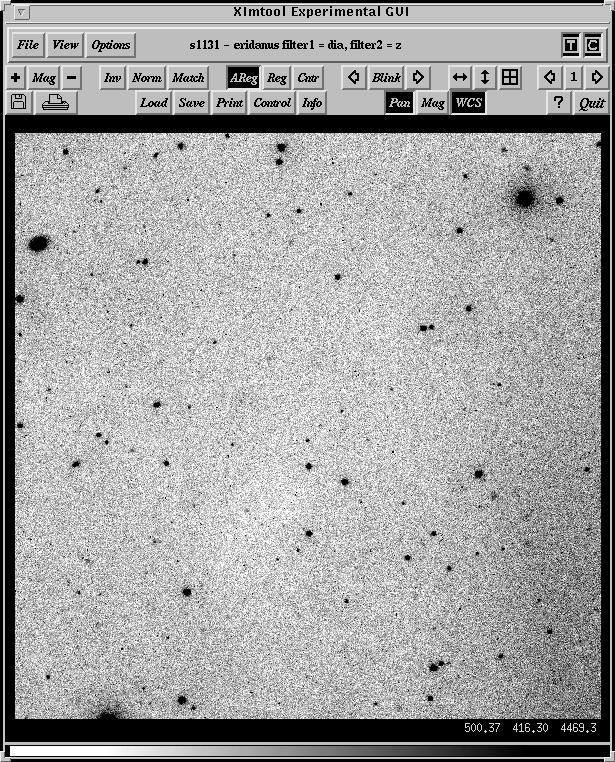

Look at the final subtractions to see if the sky subtracted well, and there is not a large flux "hole" in the image center due to print through of the median combine of the images.

Note on standard stars. For the standard star runs, I looked at the J,H,K separately, and separated out the exposure times. I then formed skies for each exptime, rather than a grand average one. I did not scale the skies; rather I used an offset and a straight median. This is because the sky is a lot less of a problem than warm pixels. The subtraction looked good but some warm pixels did not get subtracted out. One needs a few object exposures sequentially with the telescope moved to get the warm pixels removed using a local median.

First sort the data based on exptime, and make files of images in a given filter and a given exptime, such as inj04,inj15 etc.

hsel @inj $I,exptime yes | sort col=2 > junkj

hsel @inh $I,exptime yes | sort col=2 > junkh

hsel @ink $I,exptime yes | sort col=2 > junkk

imcomb @ink04 SkyK04 scal- rejec=minmax comb=median nlo=0 nhi=1 zero=mode

imcomb @inj04 SkyJ04 scal- rejec=minmax comb=median nlo=0 nhi=1 zero=mode

imcomb @inh04 SkyH04 scal- rejec=minmax comb=median nlo=0 nhi=1 zero=mode

imcomb @ink15 SkyK15 scal- rejec=minmax comb=median nlo=0 nhi=1 zero=mode

imcomb @inj15 SkyJ15 scal- rejec=minmax comb=median nlo=0 nhi=1 zero=mode

imcomb @inh15 SkyH15 scal- rejec=minmax comb=median nlo=0 nhi=1 zero=mode
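Building the per-filter, per-exptime lists (inj04, inj15, ...) by hand is error-prone. The sketch below groups lines of the form "image filter exptime", as printed by hsel with $I,irfltid,exptime, into lists named in the same style. It assumes IRFLTID is printed as a bare J, H or K. Both function names are hypothetical.

```python
from collections import defaultdict


def group_by_filter_and_exptime(lines):
    """Group 'image filter exptime' lines into lists keyed like 'inj04'."""
    groups = defaultdict(list)
    for line in lines:
        name, filt, exptime = line.split()
        key = "in%s%02d" % (filt.lower(), round(float(exptime)))
        groups[key].append(name)
    return dict(groups)


def write_lists(groups):
    """Write each group to a file of the same name, one image per line."""
    for key, names in groups.items():
        with open(key, "w") as fh:
            fh.write("\n".join(names) + "\n")
```

The resulting files can then be passed to imcomb as @inj04, @ink15 and so on.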

Then subtract the images into s???.imh

hsel a*.imh $I,irfltid,exptime yes > in2

edit in2, etc.

Put a keyword in the header to record that you have done the sky subtraction:

hedit sxxx.imh SKYSUB "Subtracted SkyJ04" add+ ver-

etc.

ccdpr s???.imh flatcor+

fixpix s???.imh mask=mask.pl

Check to see that the images are now [BZF].

ccdl s*.imh

I leave the final shift and average task to you. I tend to shift and stack only the data in common to all the dithered images. Others will first imbed the image in a large image (say 1500x1500) and then shift and stack. In the final image, some pixels will come from only one frame, others from all the frames. It depends on if you need the area or not.
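For the "common area only" approach, the section shared by all the dithered frames follows from their integer offsets. This sketch assumes offsets[i] is the position of a star in frame i minus its position in the reference frame, in whole pixels. It returns an IRAF-style section in reference-frame coordinates. The function name is hypothetical.

```python
def common_section(offsets, nx=1024, ny=1024):
    """1-based [x1:x2,y1:y2] section of the reference frame seen by every frame.

    Reference pixel (x, y) falls on pixel (x + dx, y + dy) of a frame with
    offset (dx, dy), which must lie within 1..nx and 1..ny.
    """
    x1 = max(1 - dx for dx, _ in offsets)
    x2 = min(nx - dx for dx, _ in offsets)
    y1 = max(1 - dy for _, dy in offsets)
    y2 = min(ny - dy for _, dy in offsets)
    if x1 > x2 or y1 > y2:
        raise ValueError("no pixels are common to all frames")
    return f"[{x1}:{x2},{y1}:{y2}]"
```

Passing nx=650 instead restricts the result to the columns that are free of the dither vignetting.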

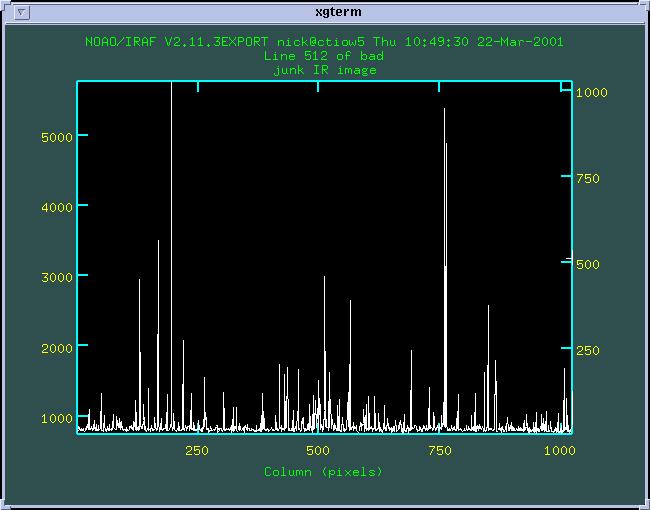

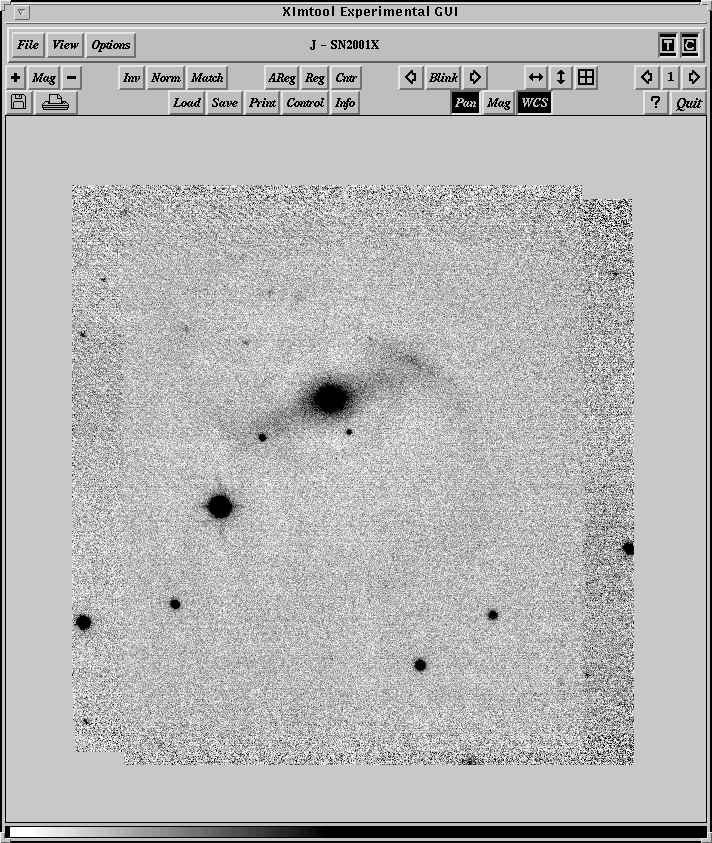

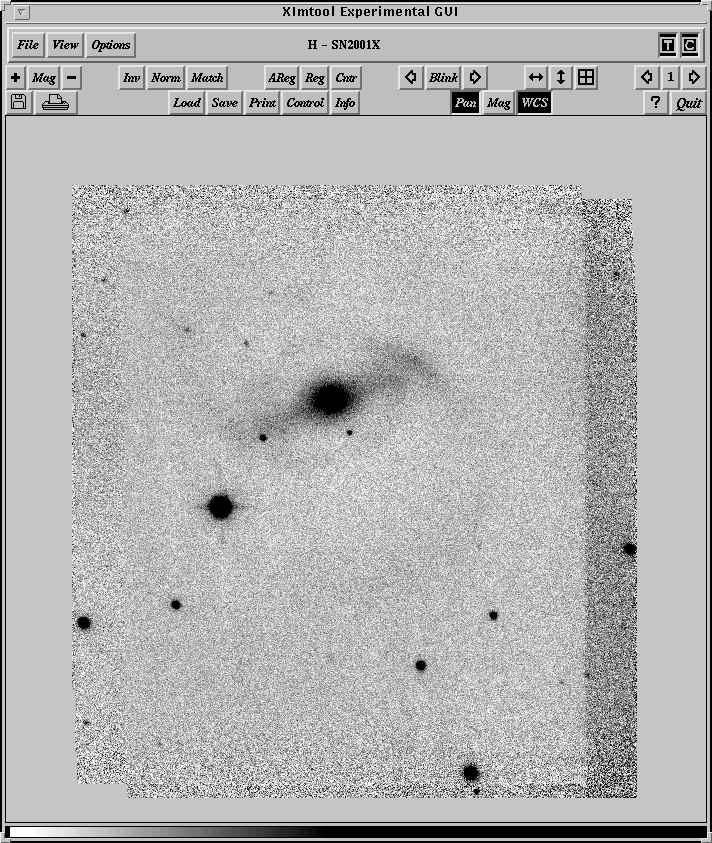

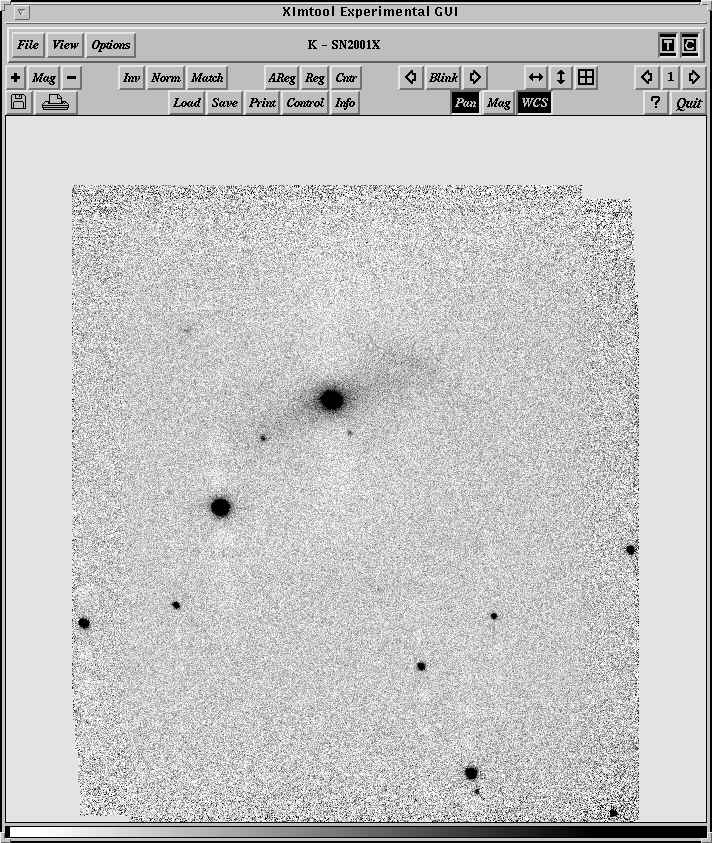

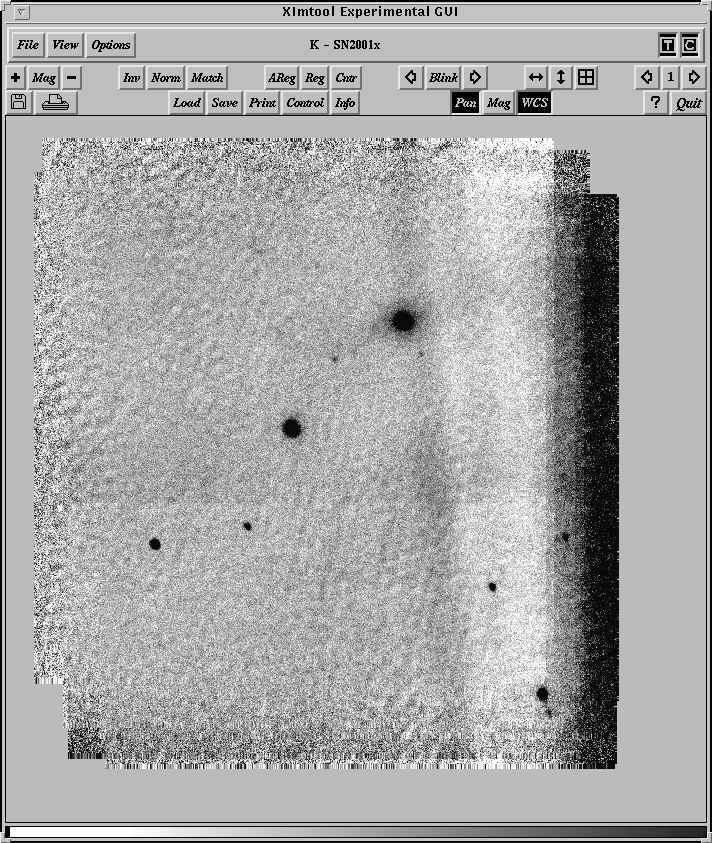

For this run with SN 2001X, the SN was faint enough that the fringing in K was causing problems. I had the operators run a script (SN01x.pro in my ftp area under yalo) which used a dither of 2 and moved the telescope by 1.0s of time between series. It also took a junk image after each telescope move. The final data for K reduced without fringing, and I will use this technique for all SN in the future.

March 2001. I used H data for the SN and two darks for the calculation. Use findgainir in nickcl for the script.

Gain 6.6e-/ADU

read noise 14.1e-

We will use a directory structure with one directory per night under the SN directory, each holding an opt and an ir subdirectory:

/uw54/nick/sn/sn01cn/20010630/opt

/uw54/nick/sn/sn01cn/20010630/ir

.daophot

# sn99em

setenv i20010630 /uw54/nick/sn/sn01cn/20010630/ir

alias i20010630 "cd $i20010630"

setenv o20010630 /uw54/nick/sn/sn01cn/20010630/opt

alias o20010630 "cd $o20010630"

You can also set them up for IRAF as:

loginuser.cl:

set o20010630 = /uw54/nick/sn/sn01cn/20010630/opt/

set i20010630 = /uw54/nick/sn/sn01cn/20010630/ir/

Copy over some useful files:

Create, or point to the uparm$ directory with the IR data information. Here is my file:

setup:

set stdimage = imt2048

set uparm = /uw50/nick/uparm/yaloir/

noao

ctio

nickcl

imred

ccdred

astutil

digi

apphot

artdata

ccdred.instrument = "myiraf$yalo_ir.dat"

ccdred.ssfile = "myiraf$yalo_ir.sub"

loadit.format = "2048"

loadit.statsec = "700:800,700:800"

keep

cpimh ir*.fits del+

cpimh nick*.fits del+

equinox ==> epoch

observat ==> "ctio"

and move the jd to JD-OLD

I run the script "yalohead" to convert the FITS headers into something more standard for IRAF.

yalohead *.imh

The task now does the setjd and the setairmass. If you need to do it by hand, do this:

setjd *.imh date="UTDATE" time="UT" exposure="EXPTIME" epoch="EQUINOX"

setairmass *.imh

Check the tilt parameters first as:

hsel *.imh $I,tilt1,tilt2,tilt3 yes

Now run:

dtilt *.imh

dtilt:

| Parameter | Value | Description |
|---|---|---|
| images | "a*.imh" | input images |
| (dither) | 40 | Tilt step: 10, 20, 30, etc. |
| (tilt1) | 1320 | Tilt position 1 |
| (tilt2) | 2225 | Tilt position 2 |
| (tilt3) | 1820 | Tilt position 3 |
| (imglist) | "tmp$tmp.562ga" | |
| (mode) | "ql" | |

Remove the junk images.

hsel *.imh $I,title yes | grep "junk" | fields - -1 > injunk

emacs injunk

ccdl @injunk

Making the biases:

The IR detector has a numerical bias of 400 units. On top of that, the dark frame at the same exptime as an object frame has warm pixels that are similar to biases. The biases we will use are in order of preference:

1. An averaged dark taken at the same time as the object frame. Check to see if the darks look okay. Sometimes the first one is bad.

displ nickdark.0001 1 zs- zr- z1=400 z2=425

displ nickdark.0002 2 zs- zr- z1=400 z2=425

hedit nickdark*.imh imagetyp zero up+ ver-

zerocomb nickdark.????.imh out=irdark45 comb=med rej=minmax nlow=1 nhigh=1

displ irdark45 1 zs- zr- z1=400 z2=500

hedit irdark45 IMAGETYP zero up+ ver-

2. The DOME_OFF frame

Note that the K DOME_OFF actually has some light on it. You must run getsky on this image, see what the sky value is, and subtract a constant to bring it to 400.

imar ir000323.K_OFF - 460 ir000323.K_OFF

3. A numerical bias frame with 400. in all the pixels. If you have to make a numerical bias, then:

imcopy ir991121.flatj zero400

imrep zero400 400. lower=INDEF upper=INDEF

hedit zero400 IMAGETYP zero up+

hedit zero400 title "Numerical bias of 400" up+

4. IMPORTANT! Whatever bias you are using, you must declare the image as a ZERO image.

hedit irdark45 IMAGETYP zero up+ ver-

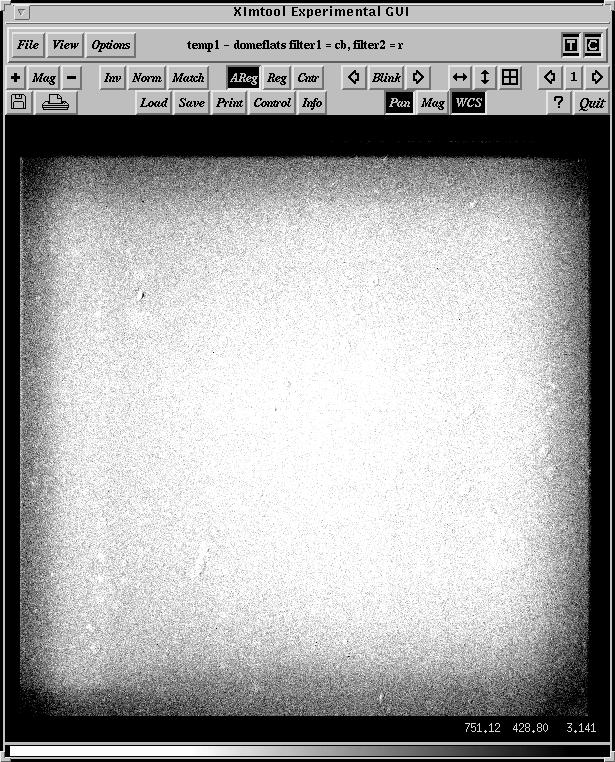

Here we are going to create a flat field for each dither position using the single set of dome images. We will form the flats in the usual manner. We will reduce the data to [ZF] before sky subtraction to remove the vignetting.

1. Form the DOME_ON-DOME_OFF flats. First of all, move the data "irflath.000?.imh" and "irdarkh.000?.imh" into a subdirectory, because we need these names.

mkdir old

imren irflath.000?.imh old

imren irdarkh.000?.imh old

Run the following script, which sets up the flats correctly for the 2 dither positions. The logic is explained below. The script makes the flats, adds the correct CCDMEAN value, and replaces all pixels with values of 1 or less (including 0) with 20000.

flat.cl:

imar nickjon.0001 - nickjoff.0001 irflatj.0001

imar nickjon.0002 - nickjoff.0002 irflatj.0002

#

imar nickhon.0001 - nickhoff.0001 irflath.0001

imar nickhon.0002 - nickhoff.0002 irflath.0002

#

imar nickkon.0001 - nickkoff.0001 irflatk.0001

imar nickkon.0002 - nickkoff.0002 irflatk.0002

#

hedit irflat?.????.imh DOMEOFF "Dome-off image was subtracted" add+ ver-

hedit irflat?.????.imh ZEROCOR "Corrected by DOME_OFF" add+

hedit irflat?.????.imh IMAGETYP "FLAT" up+ ver-

imreplace irflat?.????.imh 20000 lower=INDEF upper=1

nstat irflat?.000?.imh niter=9 mkmean+ statsec = "25:640,25:1000"

In some cases, the *.0001.imh images were corrupted because the operator did not throw away the first image. You can copy the usual nightly DOME_ON-DOME_OFF data, which are dither=1, into these images.

imdel irflatj.0001,irflath.0001,irflatk.0001

1. The flats are calculated as DOME_ON-DOME_OFF.

YOU MUST EDIT THE HEADER OF YOUR FLAT FRAMES TO SHOW THAT THESE FLATS ARE ZERO-CORRECTED. (They are already zero-corrected because they were calculated as DOME_ON-DOME_OFF). IF YOU DON'T DO THIS, THE FLATS WILL BE ZERO-CORRECTED BY CCDPR, AND THIS IS VERY WRONG!

hedit irflat?.????.imh ZEROCOR "Corrected by DOME_OFF" add+

2. The flats may have 0 value pixels, which will cause the ccdpr to crash.

The low pixels should be replaced by a large number (here I chose saturation) so that in the division, they will be close to 0. You may want to run the flats through imrep as:

imreplace irflat?.????.imh 20000 lower=INDEF upper=1

3. Next is a subtle point. We are going to divide by 2 different flats per filter.

Normally, ccdpr calculates a CCDMEAN parameter for a flat, which has the effect of dividing the flat by CCDMEAN and bringing it to an average of 1.0 before applying it to the data. But for vignetting, this is wrong. Consider 2 dither positions, and assume that the dither=2 position shows only half the counts of dither=1. This could be due to either the flat-field lamp changing, or vignetting. Assume dither=2 has 50% vignetting everywhere. If the flat at dither=1 has 1000ADU, the dither=2 flat will have 500ADU. The ccdpr program will normalize these two flats to 1.0. The resulting [ZF] data will be wrong for the dither=2 case by 50%.

What we need to do is identify, very carefully, a part of the detector where there is no vignetting, and force CCDMEAN to the mean value in that region. The resulting flats will then be correct. To do this, run nstat with mkmean+:

nstat:

| Parameter | Value | Description |
|---|---|---|
| images | "irflat?.000?.imh" | Input images |
| (statsec) | "25:640,25:1000" | Stat sec |
| (binwidth) | 0.1 | Bin width of histogram in sigma |
| (iterate) | yes | Iterate on the statistics? |
| (niter) | 5 | Number of iterations |
| (sigclip) | 2. | Sigma clip for statistics |
| (mkmean) | no | Update CCDMEAN parameters? |
| (imglist) | "tmp$tmp.7826f" | |
| (mode) | "ql" | |

nstat irflat?.000?.imh niter=9 mkmean+
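A toy one-dimensional model shows why CCDMEAN has to come from an unvignetted region. It assumes the dither=2 flat is vignetted by 50% only at x>650, so the nstat statsec 25:640 is clean. Normalizing by the whole-frame mean then leaves the flattened dither=2 sky about 18% low (about 163.5 instead of 200), while normalizing by the statsec mean gives 200, the same as dither=1. All names are hypothetical.

```python
import statistics

NX = 1024                  # pixels along x
STATSEC = slice(24, 640)   # x = 25:640 (1-based, inclusive) as a 0-based slice


def make_flat(vignetted, lamp=1000.0):
    """Toy dome-flat row; a vignetted flat loses 50% at x > 650 (1-based)."""
    return [lamp * (0.5 if vignetted and i >= 650 else 1.0) for i in range(NX)]


def normalize(flat, region=slice(None)):
    """Divide a flat by its mean over `region` (the role CCDMEAN plays)."""
    mean = statistics.fmean(flat[region])
    return [v / mean for v in flat]


def flatten(science, flat_norm):
    """Divide science data by a normalized flat, pixel by pixel."""
    return [s / f for s, f in zip(science, flat_norm)]


if __name__ == "__main__":
    flat2 = make_flat(vignetted=True)
    sky2 = [200.0 * f / 1000.0 for f in flat2]       # 200 ADU sky, same vignetting
    wrong = flatten(sky2, normalize(flat2))          # whole-frame mean
    right = flatten(sky2, normalize(flat2, STATSEC))  # unvignetted statsec mean
    print(round(wrong[0], 1), round(right[0], 1))
```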

Do the following to make sure the flats are all [Z], the bias is declared [zero], and the flats are declared as flats:

ccdl irflat*.imh,irdark*.imh

irflath.0001.imh[1024,1024][real][flat][H][Z]:Dome H On, Dither=2/40

irflath.0002.imh[1024,1024][real][flat][H][Z]:Dome H On, Dither=2/40

irflatj.0001.imh[1024,1024][real][flat][J][Z]:Dome J On, Dither

irflatj.0002.imh[1024,1024][real][flat][J][Z]:Dome J On, Dither

irflatk.0001.imh[1024,1024][real][flat][K][Z]:Dome K On, Dither=2/40

irflatk.0002.imh[1024,1024][real][flat][K][Z]:Dome K On, Dither=2/40

irdark45.imh[1024,1024][real][zero][DARK]:Dark 45s

3.5 Go ahead and rename the data to something simple:

imren ir011217.0*.imh %ir011217.0%r%*.imh

4. Now we flatten the data with the separate dither flats.

I have written a task called yaloflatir.cl which will form the IRAF script to handle the dither flats. Run it as:

yaloflatir r???.imh

Then run

cl < yfir.cl

ccdproc r102.imh zerocor+ zero=irdark45 flatcor+ flat=irflatj.0001

etc.

The data are now [ZF].

ccdr:

| (pixeltype = | "real real") | Output and calculation pixel datatypes |

| (verbose = | yes) | Print log information to the standard output? |

| (logfile = | "logfile") | Text log file |

| (plotfile = | "") | Log metacode plot file |

| (backup = | "") | Backup directory or prefix |

| (instrument = | "myiraf$/yalo_ir.dat") | CCD instrument file |

| (ssfile = | "myiraf$/yalo_ir.sub") | Subset translation file |

| (graphics = | "stdgraph") | Interactive graphics output device |

| (cursor = | "") | Graphics cursor input |

| (version = | "2: October 1987") | |

| (mode = | "ql") | |

| ($nargs = | 0) |

ccdpr

| images = | "a*.imh" | List of CCD images to correct |

| (output = | "") | List of output CCD images |

| (ccdtype = | "") | CCD image type to correct |

| (max_cache = | 0) | Maximum image caching memory (in Mbytes) |

| (noproc = | no) | List processing steps only?\n |

| (fixpix = | no) | Fix bad CCD lines and columns? |

| (overscan = | no) | Apply overscan strip correction? |

| (trim = | no) | Trim the image? |

| (zerocor = | yes) | Apply zero level correction? |

| (darkcor = | no) | Apply dark count correction? |

| (flatcor = | no) | Apply flat field correction? |

| (illumcor = | no) | Apply illumination correction? |

| (fringecor = | no) | Apply fringe correction? |

| (readcor = | no) | Convert zero level image to readout correction? |

| (scancor = | no) | Convert flat field image to scan correction?\n |

| (readaxis = | "line") | Read out axis (column|line) |

| (fixfile = | "") | File describing the bad lines and columns |

| (biassec = | "") | Overscan strip image section |

| (trimsec = | "") | Trim data section |

| (zero = | "irdark45") | Zero level calibration image |

| (dark = | "") | Dark count calibration image |

| (flat = | "irflat?.imh") | Flat field images |

| (illum = | "") | Illumination correction images |

| (fringe = | "") | Fringe correction images |

| (minreplace = | 1.) | Minimum flat field value |

| (scantype = | "shortscan") | Scan type (shortscan|longscan) |

| (nscan = | 1) | Number of short scan lines\n |

| (interactive = | yes) | Fit overscan interactively? |

| (function = | "legendre") | Fitting function |

| (order = | 4) | Number of polynomial terms or spline pieces |

| (sample = | "*") | Sample points to fit |

| (naverage = | 1) | Number of sample points to combine |

| (niterate = | 3) | Number of rejection iterations |

| (low_reject = | 2.5) | Low sigma rejection factor |

| (high_reject = | 2.5) | High sigma rejection factor |

| (grow = | 0.) | Rejection growing radius |

| (mode = | "ql") |

myiraf$/yalo_ir.dat:

exptime exptime

imagetyp imagetyp

subset IRFLTID

| OBJECT | object | |

| DARK | zero | |

| FLAT | flat | |

| BIAS | zero | |

| MASK | other |

myiraf$/yalo_ir.sub

| 'H' | H |

| 'J' | J |

| 'K' | K |

Make a mask image as follows. Here we use the dome flats corrected for DOME_OFF to make the mask. Note that there are very many warm pixels on the detector, and about 10% of these change flux during the night. If the warm pixels change flux between the ON and OFF images, they will be flagged as bad pixels here.

The philosophy of the masks is that all pixels in a normalized image that are below some value like 0.7 (or above an upper limit like 1.2) are probably bad and will be marked as bad pixels.

mask1.cl:

# to make the mask, use imhist and look for the limits

# first flatten the flats and remove the edge defects

#

real midpt

string img

img = "irflath.0002"

#

imdel("temp*.imh,mask*.imh,mask.pl", >>& "dev$null")

imstat(img//"[50:600:10,50:1000:10]",fields="midpt",form-) | scan(midpt)

print(img," ",midpt)

imar(img,"/",midpt,"temp1")

imtrans("temp1","temp2")

fmed("temp2","temp3", xwin=201, ywin=1, boundary="wrap",zlo=0.4,zhi=2.0)

imtrans("temp3","temp4")

imar("temp1", "/", "temp4", "mask")

imdel("temp*.imh", >>& "dev$null")

imrep mask.imh[*,1:10] 0 lower=INDEF upper=INDEF

imrep mask.imh[*,1020:1024] 0 lower=INDEF upper=INDEF

imrep mask.imh[1:1,*] 0 lower=INDEF upper=INDEF

imrep mask.imh[1021:1024,*] 0 lower=INDEF upper=INDEF

#

# now check the histogram and change the limits if needed.

#

imhist mask.imh z1=0.4 z2=1.4 nbins=100

displ mask.imh 1 zs- zr- z1=0.5 z2=1.5
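The heart of mask1.cl is a high-pass filter: normalize the flat, take a running median along each column (that is what the imtranspose / fmedian xwin=201 ywin=1 / imtranspose sequence does), and divide it out so that only pixel-to-pixel defects remain. Below is a one-column Python sketch of that idea, not a replacement for the script. It normalizes by the median of the whole column rather than of a subsampled section, and its fallback when every value in a window is rejected is an assumption of the sketch, not documented fmedian behaviour.

```python
from statistics import median


def running_median_wrap(values, window, zlo, zhi):
    """Running median with a wrap-around boundary.

    Values outside [zlo, zhi] are left out of each window's median; if every
    value in a window is rejected, the unrejected window is used instead
    (an assumption of this sketch).
    """
    n = len(values)
    half = window // 2
    out = []
    for i in range(n):
        box = [values[(i + k) % n] for k in range(-half, half + 1)]
        kept = [v for v in box if zlo <= v <= zhi]
        out.append(median(kept if kept else box))
    return out


def highpass_column(column, window=201, zlo=0.4, zhi=2.0):
    """Normalize one flat column by its median, then divide by its running median."""
    m = median(column)
    norm = [v / m for v in column]
    smooth = running_median_wrap(norm, window, zlo, zhi)
    return [v / s for v, s in zip(norm, smooth)]


# Toy column: smooth gradient plus one dead pixel at 30% of the local level.
column = [1000.0 + x for x in range(500)]
column[250] *= 0.3
ratio = highpass_column(column)
print(round(ratio[250], 3), ratio[360])
```

The gradient divides out to 1.0 away from the dead pixel, while the dead pixel keeps its 0.3 ratio and will fall below the lower mask limit.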

mask2.cl

#

# good pix are 0, bad are 1 for IRAF mask

# the limits lll and uuu below need to be checked on the histogram

# each time you make the mask.

#

real lll,uuu

real hist1,hist2,hist3,xjunk,histsum,nax1,nax2,npixx,ratio

lll = 0.75

uuu = 1.19

#

imhist('mask',z1=lll,z2=uuu,list+,nbin=1) | scan(xjunk,hist1)

imhist('mask',z1=INDEF,z2=lll,list+,nbin=1) | scan(xjunk,hist2)

imhist('mask',z1=uuu,z2=INDEF,list+,nbin=1) | scan(xjunk,hist3)

histsum= hist1+hist2+hist3

hsel('mask','naxis1','yes') | scan(nax1)

hsel('mask','naxis2','yes') | scan(nax2)

npixx=nax1*nax2

ratio=(hist2+hist3)/npixx

printf("Fraction rejected=%9.3f\n",ratio)

#

imhist('mask',z1=lll,z2=uuu,list+,nbin=1)

imdel temp.imh

imcopy mask temp

displ mask 1

imrep("mask", lower=INDEF, upper=lll, val=-1 )

imrep("mask", lower=uuu, upper=INDEF, val=-1)

imrep("mask", lower=lll, upper=uuu, val=0)

imar mask * mask mask

imcopy mask.imh mask.pl

# make DAOPHOT mask where bad pix are 0 and good are 1

imrename mask.imh maskdao

imar maskdao - 1 maskdao

imar maskdao * -1 maskdao

#

displ mask.pl 2 zs- zr- z1=0 z2=1

You can check frames 1,2 to see if the mask looks good.
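The thresholding in mask2.cl can be sketched in Python on a small list-of-rows image. Assuming imreplace limits are inclusive, the two -1 replacements run first, so a pixel exactly at lll or uuu ends up bad; good pixels satisfy lll < v < uuu. Squaring the -1 values (imar mask * mask mask) gives the IRAF convention of 1 = bad, and 1 - mask gives the DAOPHOT convention of 1 = good.

```python
def make_masks(norm, lll=0.75, uuu=1.19):
    """Threshold a high-passed flat (list of rows) the way mask2.cl does.

    Returns (iraf_mask, dao_mask, fraction_rejected).
    """
    iraf_mask = [[0 if lll < v < uuu else 1 for v in row] for row in norm]  # 1 = bad
    dao_mask = [[1 - m for m in row] for row in iraf_mask]                  # 1 = good
    npix = sum(len(row) for row in norm)
    nbad = sum(sum(row) for row in iraf_mask)
    return iraf_mask, dao_mask, nbad / npix


norm = [[1.00, 0.50, 1.30],
        [0.75, 0.98, 1.19]]
iraf_mask, dao_mask, frac = make_masks(norm)
print(iraf_mask, dao_mask, round(frac, 3))
```

The returned fraction corresponds to the "Fraction rejected" line the script prints.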

Make input lists for all the SN data, first by SN (inx, incn, ...) and then by SN and filter (inxj, inxh, ...). These will be used to make the sky.

del in*

files r*.imh > in1

hsel @in1 $I,title yes | grep "X" - | fields - 1 > inx

hsel @in1 $I,title yes | grep "cn" - | fields - 1 > incn

hsel @in1 $I,title yes | grep "cz" - | fields - 1 > incz

hsel @in1 $I,title yes | grep "bt" - | fields - 1 > inbt

hsel @in1 $I,title yes | grep "du" - | fields - 1 > indu

hsel @in1 $I,title yes | grep "el" - | fields - 1 > inel

Now grep the per-SN lists to separate out the different filters:

hsel @inx $I,irfltid yes | grep "J" - | fields - 1 > inxj

hsel @inx $I,irfltid yes | grep "H" - | fields - 1 > inxh

hsel @inx $I,irfltid yes | grep "K" - | fields - 1 > inxk

hsel @incn $I,irfltid yes | grep "J" - | fields - 1 > incnj

hsel @incn $I,irfltid yes | grep "H" - | fields - 1 > incnh

hsel @incn $I,irfltid yes | grep "K" - | fields - 1 > incnk

hsel @incz $I,irfltid yes | grep "J" - | fields - 1 > inczj

hsel @incz $I,irfltid yes | grep "H" - | fields - 1 > inczh

hsel @incz $I,irfltid yes | grep "K" - | fields - 1 > inczk

hsel @inbt $I,irfltid yes | grep "J" - | fields - 1 > inbtj

hsel @inbt $I,irfltid yes | grep "H" - | fields - 1 > inbth

hsel @inbt $I,irfltid yes | grep "K" - | fields - 1 > inbtk

hsel @indu $I,irfltid yes | grep "J" - | fields - 1 > induj

hsel @indu $I,irfltid yes | grep "H" - | fields - 1 > induh

hsel @indu $I,irfltid yes | grep "K" - | fields - 1 > induk

hsel @inel $I,irfltid yes | grep "J" - | fields - 1 > inelj

hsel @inel $I,irfltid yes | grep "H" - | fields - 1 > inelh

hsel @inel $I,irfltid yes | grep "K" - | fields - 1 > inelk
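The two grep passes amount to grouping frames by an SN tag in the title and by filter. A Python sketch with hypothetical (image, title, irfltid) tuples; like grep, it matches substrings case-sensitively, so a short tag such as "el" will also match unrelated title text (e.g. "field"), and the lists are worth checking by eye.

```python
from collections import defaultdict


def split_lists(headers, sn_tags=("X", "cn", "cz", "bt", "du", "el"), filters="JHK"):
    """Group image names into lists named like inxj, incnh, inbtk.

    headers: (image, title, irfltid) tuples, e.g. parsed from hsel output.
    As with grep, a tag or filter matches anywhere in the string, case-sensitively.
    """
    lists = defaultdict(list)
    for image, title, filt in headers:
        for tag in sn_tags:
            if tag not in title:
                continue
            for f in filters:
                if f in filt:
                    lists["in" + tag.lower() + f.lower()].append(image)
    return dict(lists)


headers = [
    ("r101.imh", "SN2001cn", "J"),
    ("r102.imh", "SN2001cn", "H"),
    ("r103.imh", "SN2001X", "K"),
    ("r104.imh", "SN2001bt", "J"),
]
print(split_lists(headers))
```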

Run irsky. MAKE SURE THAT THE INSUF AND OUTSUF ARE CORRECTLY SET OR YOU WILL OVERWRITE YOUR DATA:

irsky:

| images = | "@inh" | input images |

| (statsec = | "[25:600,25:1000]") | Stat sec |

| (sigma = | 2.5) | sigma clip for stats |

| (niter = | 9) | iterations for sigma clipping |

| (irfltid = | "IRFLTID") | keyword for filter |

| (outimage = | "Sky") | Output root for sky image |

| (nlow = | 0) | number of low pixels to reject |

| (nhigh = | 1) | number of high pixels to reject |

| (combine = | "median") | type of combine function |

| (reject = | "minmax") | type of rejection |

| (insuf = | "r") | Root suffix for input image |

| (outsuf = | "s") | Root suffix for output image |

| (imglist1 = | "t1.jnk") | |

| (mode = | "al") |

You may have to adjust nhigh to reduce the print-through.
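Why nhigh matters: when a star lands on the same pixel in more than one dither, a plain median leaks part of its flux into the sky frame. A per-pixel Python sketch of a median combine with minmax rejection on toy 1-D frames, using the irsky parameter names nlow and nhigh. It ignores any scaling or statistics irsky computes in statsec, so it illustrates the idea rather than reproducing irsky.

```python
from statistics import median


def minmax_median_sky(frames, nlow=0, nhigh=1):
    """Per-pixel median after dropping the nlow lowest and nhigh highest values."""
    sky = []
    for pixel_values in zip(*frames):
        stack = sorted(pixel_values)
        kept = stack[nlow:len(stack) - nhigh]
        sky.append(median(kept))
    return sky


def subtract_sky(frame, sky):
    return [v - s for v, s in zip(frame, sky)]


# Four toy dithered frames (sky = 100 ADU); a star lands on pixel 2 twice.
frames = [
    [100, 100, 900, 100, 100],
    [100, 100, 700, 100, 100],
    [100, 900, 100, 100, 100],
    [100, 100, 100, 100, 100],
]
print(minmax_median_sky(frames, nhigh=0))  # print-through at pixel 2
print(minmax_median_sky(frames, nhigh=1))
```

With nhigh=0 the sky at pixel 2 is 400 ADU, which would leave a "hole" when subtracted; rejecting the highest value restores 100 ADU.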

This program outputs a file called sub.cl which you run to do the sky subtractions.

cl < sub.cl

This is now sky subtracted data. All the data should be near 0 sky. You can check this with getsky.

task getsky = home$scripts/getsky.cl

Look at the final subtractions to check that the sky subtracted well and that there is no large flux "hole" in the image center caused by print-through in the median combine of the images.

After the sky subtraction is done, rename the SkyJ, etc. images so you don't overwrite them.

imren SkyJ SkyJcn

Do the sky subtraction on J, H, and K. For J and H, I usually used a single sky averaged over both dithers. For K, and sometimes for J and H, do each dither separately. You have to look at the sky to decide whether to separate out the dithers. Make two files, ink1 and ink2, as follows:

dithsep @ink

etc.

This will do the following:

hsel @ink $I,dither yes | sort col=2 > ink1

emacs ink1 ink2

irsky @ink1

cl < sub.cl

imren SkyK SkyK1 <== VERY IMPORTANT TO DO !!!

irsky @ink2

cl < sub.cl

imren SkyK SkyK2

hsel @inh $I,dither yes | sort col=2 > inh1

emacs inh1 inh2

irsky @inh1

cl < sub.cl

imren SkyH SkyH1 <== VERY IMPORTANT TO DO !!!

irsky @inh2

cl < sub.cl

imren SkyH SkyH2

The data are now sky subtracted. Do ALL the data before the next step.

For the final mosaic, you should set the bad pixels to a large number. Since saturation is 10000, 20000 ADU is a good value.

imar s*.imh / maskdao s*.imh divzero=20000
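Dividing by maskdao works because good pixels are 1 and bad pixels are 0, so imarith's divzero value lands exactly on the bad pixels and nowhere else. The same logic in a few lines of Python on a list of pixel values:

```python
def flag_bad_pixels(image, dao_mask, divzero=20000.0):
    """Mimic 'imar image / maskdao image divzero=20000' on a list of pixels.

    Good pixels (mask 1) are unchanged; bad pixels (mask 0) become divzero.
    """
    return [v / m if m != 0 else divzero for v, m in zip(image, dao_mask)]


print(flag_bad_pixels([5.0, -3.0, 12.0], [1, 0, 1]))
```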

If you want to fix the bad pixels for pretty pictures:

fixpix s???.imh mask=mask.pl

The data will be [BZF] now.

The final mosaic is a piece of art, and I don't have the killer technique yet. The following does an excellent job if the night is photometric. The main problem we face is to detect and remove the warm pixels/cr's without removing flux from the stars.

The first step is to shift the data. If the seeing is >3 pix or so, use integer shifts.

We will now operate on the s*.imh images. Run:

chsuf in1 sufin="r" sufout="s"

etc.

rimexam.iterations = 1

yalocenter @inj

!$myprog/prog48 junk.dat

cl < shift.cl

displ frame=1 zs- zr- z1=-10 z2=200 image=temp10

displ frame=2 zs- zr- z1=-10 z2=200 image=temp11

This will produce integer-shifted images called temp*.imh. You can modify prog48 if you want real-valued shifts, but I would not recommend it.
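The basic arithmetic behind integer shifts is to measure the same star on every frame, subtract its position from the reference position, and round. A Python sketch with made-up centroids; it is not prog48 (whose source is not shown here), and the sign convention, the shift needed to move each frame onto the reference frame, is an assumption.

```python
def integer_shifts(centers, ref_index=0):
    """Integer (dx, dy) that would move each frame's star onto the reference position.

    centers: (x, y) centroids of the same star on each frame.
    Python's round() sends exact .5 cases to the nearest even integer.
    """
    xr, yr = centers[ref_index]
    return [(round(xr - x), round(yr - y)) for x, y in centers]


centers = [(512.3, 500.1), (498.9, 512.7), (525.4, 487.7)]
print(integer_shifts(centers))
```

Rounding throws away up to half a pixel per axis, which is why integer shifts are only appropriate when the seeing is several pixels wide.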

The final combine can be made as follows.

Use the stsdas.hst_calib.wfpc package and run noisemodel on your data. Converge on the read noise and scalenoise. You will see a plot with a bunch of points at the lower left and two parallel sequences toward the upper right. Fudge the read noise until the model passes through the lower-left points. Then fudge the scalenoise (in units of percent) until it passes through the LOWER sequence, which is the stars. The upper sequence is warm pixels.

stsdas

hst_calib

wfpc

noisemodel s111 xb=10 yb=10

Enter these parameters into imcombine, remembering to convert the scalenoise from percent to a fraction. For instance, I found:

imdel t.imh,t.pl

# H

#imcomb temp??.imh t plf=t.pl comb=ave reject=ccd lth=-50 hth=15000 \\

# gain=6.5 rdn=50 snoise=0.35 lsig=4 hsig=4

# K

imcomb temp??.imh t plf=t.pl comb=ave reject=ccd lth=-200 hth=15000 \\

gain=6.5 rdn=95 snoise=0.30 lsig=4 hsig=4

# J

imcomb temp??.imh t plf=t.pl comb=ave reject=ccd lth=-50 hth=10000 \\

gain=6.5 rdn=21 snoise=0.3 lsig=4 hsig=4

displ t.imh 1 zs- zr- z1=-20 z2=100

displ t.pl 2

Then

imren t.imh SN2001cnj.imh

imren t.pl SN2001cnj.pl
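The gain, rdn, and snoise values feed the CCD noise model behind reject=ccdclip. As I understand the IRAF imcombine documentation, the expected noise in ADU is sqrt((rdnoise/gain)^2 + DN/gain + (snoise*DN)^2), with rdnoise in electrons and snoise as a fraction. A Python sketch of the rejection test; using the median as the reference level and clamping negative (sky-subtracted) levels to zero in the Poisson term are assumptions of the sketch.

```python
import math
from statistics import median


def ccd_sigma(dn, gain, rdnoise, snoise):
    """Expected noise in ADU at a level of dn ADU (rdnoise in e-, snoise as a fraction)."""
    return math.sqrt((rdnoise / gain) ** 2 + max(dn, 0.0) / gain + (snoise * dn) ** 2)


def ccdclip_flags(values, gain, rdnoise, snoise, lsig, hsig):
    """Flag values more than lsig (low) or hsig (high) sigma from the median."""
    center = median(values)
    sigma = ccd_sigma(center, gain, rdnoise, snoise)
    return [v < center - lsig * sigma or v > center + hsig * sigma for v in values]


# J-band values from the example above; a scalenoise of 30% becomes snoise = 0.30.
snoise = 30 / 100
pixel_stack = [0.0, 2.0, -1.0, 1.0, 60.0]  # toy sky-subtracted values, one warm pixel
print(ccdclip_flags(pixel_stack, gain=6.5, rdnoise=21, snoise=snoise, lsig=4, hsig=4))
```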

When the detector had lots of warm pixels, I used

imdel t.imh,t.pl

# H

#imcomb temp??.imh t plf=t.pl comb=ave reject=ccd lth=-50 hth=10000 \\

# gain=6.5 rdn=72 snoise=0.60 lsig=6 hsig=5

# K

#imcomb temp??.imh t plf=t.pl comb=ave reject=ccd lth=-500 hth=10000 \\

# gain=6.5 rdn=140 snoise=0.55 lsig=7 hsig=6

# J

imcomb temp??.imh t plf=t.pl comb=ave reject=ccd lth=-50 hth=10000 \\

gain=6.5 rdn=55 snoise=0.70 lsig=7 hsig=5

displ t.imh 1 zs- zr- z1=-20 z2=100

displ t.pl 2

If the night was not photometric, we have to estimate a scale factor. I have not worked this out yet, but it will require scaling on the galaxy or on some stars, while excluding bad pixels from the calculation.

If it is not photometric, I find that I have to change the clipping from sig=4 to sig=6-8.

We need to get the psf photometry done quickly. So let's not waste too much time getting the best psfs.

Here is an outline of the data reduction.

1. Copy over the *.opt files:

copy /uw50/nick/daophot/optfiles/yalo/ir/*.opt .

copy /uw50/nick/daophot/optfiles/yalo/ir/jhk2.clb .

copy /uw50/nick/daophot/optfiles/yalo/ir/ir2.lib .

We will solve for

[J,J-H]

[H,J-H]

[K,J-K]

because we often don't have K. I don't have color terms for J-H yet, so we will set them to 0 right now.

daophot.opt:

| Read noise | = | 2.1 | |

| Gain | = | 6.5 | |

| FWHM | = | 5.5 | |

| Fitting radius | = | 5.5 | |

| PSF radius | = | 4 | |

| Analytic model PSF | = | 3 | |

| Variable PSF | = | 0 | |

| Extra PSF cleaning passes | = | 5 | |

| High good datum | = | 10000 | |

| Watch progress | = | -2 | |

| Threshold | = | 7 |

allstar.opt:

| Fitting Radius | = | 4.5 | |

| IS (Inner sky radius) | = | 2 | |

| OS (Outer sky radius) | = | 25 | |

| Redetermine Centroids | = | 1 |

photo.opt:

| A1 | = | 7.0000 | |

| A2 | = | 7.5195 | |

| A3 | = | 8.2987 | |

| A4 | = | 9.3377 | |

| A5 | = | 10.6364 | |

| A6 | = | 12.1948 | |

| A7 | = | 14.0130 | |

| A8 | = | 16.0909 | |

| A9 | = | 18.4286 | |

| AA | = | 21.0260 | |

| AB | = | 23.8831 | |

| AC | = | 27.0000 | |

| IS | = | 30.0000 | |

| OS | = | 35.0000 |

3. To create the *.inf file.

mv in* old

del junk.dat

files SN*.imh > in1

hsel @in1 $I,IRFLTID,utmiddle,airmass,exptime,hjd,title,ha yes > junk.dat

!$myprog/prog3a junk.dat

0

name

/uw50/nick/daophot/irafstuff/filters_yalo_ir.dat

4. Measure the FWHM as:

del junk.dat

yaloshift @in1

etc.

Then run

!$myprog/prog39 junk.dat

You also have to enter the read noise and gain. Use hsel to get ncoadds and ncombine, and run nstat on the data to get the read noise:

hsel @in1 $I,ncoadds,ncombine yes | fields - 2,3 | filec STDIN "$1;$2;6.5*$1*$2" fo="%6d%6d%6d"

nstat @in1 statsec=1000:1100,1000:1100 iter+ niter=2 sig=4

Then enter these values into fwhm.dat. Since we have averaged a lot of data together, the gain is 6.5*N, where N is the number of individual frames averaged. We assume N is about n*m, where n is the number of coadds and m is the number of combined frames (ncombine).
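The bookkeeping for an average of N = n*m frames, as a Python sketch: the effective gain is 6.5*N as stated above, and averaging N frames lowers the read noise in ADU by sqrt(N). For a single frame, 13.4 e- / 6.5 e-/ADU is about 2.1 ADU, which matches the read noise in daophot.opt. The fwhm.dat read noise values come from nstat on the combined images, so they need not match this idealized number.

```python
import math


def averaged_frame_params(gain, ron_e, ncoadds, ncombine):
    """Effective gain (e-/ADU) and read noise (ADU) for an average of N = ncoadds*ncombine frames."""
    n = ncoadds * ncombine
    gain_eff = gain * n                          # 6.5*N, as in the text
    ron_adu_single = ron_e / gain                # one frame: 13.4 e- / 6.5 = 2.06 ADU
    ron_adu_eff = ron_adu_single / math.sqrt(n)  # averaging N frames
    return gain_eff, ron_adu_eff


g, r = averaged_frame_params(6.5, 13.4, ncoadds=1, ncombine=4)
print(g, round(r, 2))
```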

Each entry in fwhm.dat is the image name on one line, followed by a line containing:

fwhm psf_rad var gain ron

fwhm.dat:

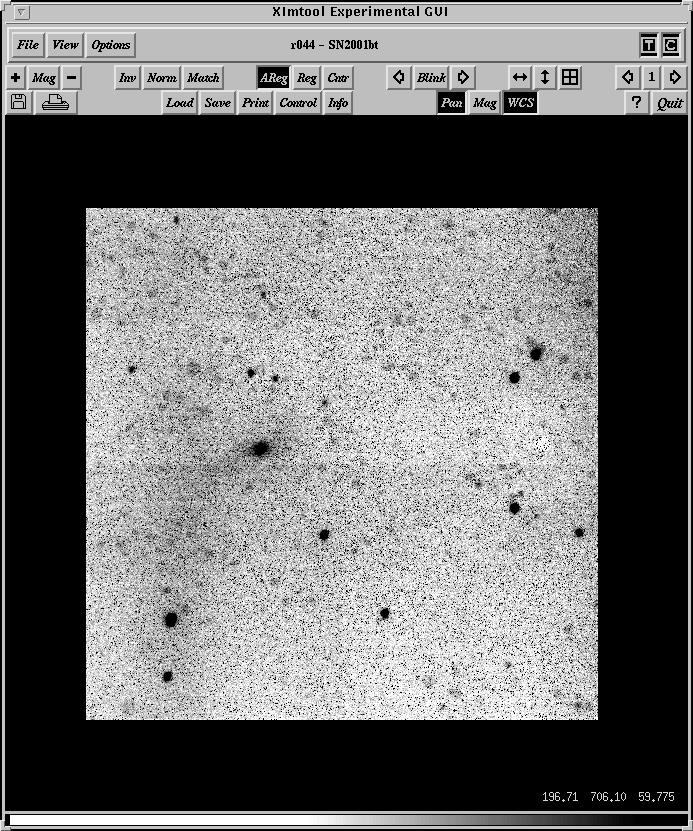

sn2001bt_h.imh

4.62 15 1 35 3.34

sn2001bt_j.imh

4.51 15 1 35 1.72

sn2001bt_k.imh

4.12 15 1 35 4.36

sn2001cn_h.imh

4.47 15 1 65 2.26

sn2001cn_j.imh

4.31 15 1 65 1.01

Note this program forces a var=1. If there are too few stars, use var=0. THIS IS IMPORTANT!!

5. For SN data, run BYALOIR and enter in the data from fwhm.dat.

Note that BPASSIR and BYALOIR take 5 parameters: fwhm, psf size, variation, gain, and read noise. It only solves for var=-1 in this pass. I used a threshold of 10 and var=1. If there are only a few stars in the frame, use var=0. It takes about 4 min per frame to run.

This program runs BPASS1, prog11a, and FINAL2. If needed, clean up the PSF with:

!$myprog/prog11a sn2001bt_h 0.1

or use dals to edit the PSF stars in the *.lst file.

6. Add in the supernova if it was missed by BYALOIR with addals. Run FINAL2 again.

If the SN is too faint, you may want to run ALLFRAME. To do this, make the *.mch file (below), run DAOMASTER again to make a *.mag file, and renumber the stars with DAOPHOT. Then run BALLFRAME. After ALLFRAME, you need to run the following to clean up the data (turn *.alf into *.als, etc.).

!$myprog/prog45 SN2001bth

!source SN2001bth

!/uw50/nick/daophot/perl/daomaster.pl SN2001bth

7. Make the *.mch file for each SN. Use yalocenter to identify a star on each frame (the positions go into junk.dat), and then run prog52b:

yalocen SN*bt?.imh

!$myprog/prog52b junk.dat als

This makes the correct *.mch file in DAOMATCH format.

Run DAOMASTER as:

/uw50/nick/daophot/perl/daomaster.pl

8. Make the *.fet file. Use the same star numbers as the optical images.

IMPORTANT - ONLY CHOOSE STARS THAT ARE NEAR THE SN AND WERE ON ALL THE FRAMES. DO NOT CHOOSE STARS NEAR THE EDGE OR BEYOND COL 600. LOOK AT THE *.PL FILE TO MAKE SURE!

The data are now ready for REDUCE. Copy the *.net files and run REDUCE.

cp /uw52/nick/sn/sn01cz/ir/SN2001cz.net .

cp /uw52/nick/sn/sn01bt/ir/SN2001bt.net .

cp /uw52/nick/sn/sn01cn/ir/SN2001cn.net .

cp /uw52/nick/sn/sn01du/ir/SN2001du.net .

cp /uw52/nick/sn/sn01x/ir/SN2001x.net .

reduce

i20010710

SN2001czh

E

SN2001cz.net

SN2001czh

7

1 1 1

etc.

9. If you want to make a *.net file for the photometry, do the following:

a. Find a night which looks photometric. If there were standards taken, great! If not, we can still fake it.

b. I assume the data are reduced through *.fet, *.mch, and *.als. We now run DAOGROW. Make a *.lis file.

ls -1 *ap >> i20010618.lis

c. Now run COLLECT. You can use:

!$myprog/prog43 SN2001czh

to speed things up.

d. Now, if you have real standards, you can run CCDSTD with just the A0,B0, and C0 coeffs missing.

Use this updated *.clb file.

d. If you don't have standards, make sure you have a *.clb file that has the name of the night, and the right set of filters.

If you have jhk data, use jhk1.clb. If you have only jh data, use jh.clb. Rename *.clb to something like

mv jhk2.clb i20010618.clb

e. Now run CCDAVE (not CCDSTD!) to get the *.net file.

This will have the preliminary photometry. I called it sn2001cn_ir.net. Put the *.net file in the appropriate directory for future use. Also put the *.fet and the master image there so we can remember what we did!

f. You can check the field using:

!$myprog/prog55b /uw52/nick/sn/sn01cn/ir/SN2001cn.net i20010710.net

at columns 26, 44, 62

DONE!

SN2000cx data reductions

Data taken June-Sept 2001

April 2001

Gain 6.6e-/ADU

read noise 14.1e-

0. Copy all the images from fits to imh.

cpimh ir*.fits del+

1. The YALO FITS headers have some features which I change.

equinox ==> epoch

observat ==> "ctio"

and move the jd to JD-OLD

I run the script "yalohead" to convert the FITS headers into something more standard for IRAF.

yalohead ir*.imh

setjd ir*.imh date="UTDATE" time="UT" exposure="EXPTIME" epoch="EQUINOX"

setairmass ir*.imh

2. I have written a small task to put a dither parameter in the header.

If you have standards that were taken with dithers, you may want to use this.

Check the tilt parameters first as:

hsel *.imh $I,tilt1,tilt2,tilt3 yes

dtilt *.imh

dtilt:

| images = | "a*.imh" | input images | |

| (dither = | 40) | Tilt step: 10, 20, 30, etc. | |

| (tilt1 = | 1320) | Tilt position 1 | |

| (tilt2 = | 2225) | Tilt position 2 | |

| (tilt3 = | 1860) | Tilt position 3 | |

| (imglist = | "tmp$tmp.562ga") | ||

| (mode = | "ql") |

The IR detector has a numerical bias of 400 units. On top of that, a dark frame with the same exposure time as an object frame contains warm pixels that behave like a bias pattern. In order of preference, the biases we will use are:

1. An averaged dark taken at the same time as the object frame.

Check to see if the darks look okay. Sometimes the first one is bad.

zerocomb nickdark.????.imh out=irdark45 comb=med rej=minmax nlow=1 nhigh=1

displ irdark45 1

2. The DOME_OFF frame

Note that the K DOME_OFF actually has some light on it. You must run getsky on this image, see what the sky value is, and subtract a constant to bring it to 400.

imar ir000323.K_OFF - 460 ir000323.K_OFF

3. A numerical bias frame with 400. in all the pixels. If you have to make a numerical bias, then:

imcopy ir991121.flatj zero400

imrep zero400 400. lower=INDEF upper=INDEF

hedit zero400 IMAGETYP zero up+

hedit zero400 title "Numerical bias of 400" up+

4. IMPORTANT! Whatever bias you are using, you must declare the image as a ZERO image.

hedit irdark45 IMAGETYP zero up+ ver-

1. The flats are calculated as DOME_ON-DOME_OFF.

YOU MUST EDIT THE HEADER OF YOUR FLAT FRAMES TO SHOW THAT THESE FLATS ARE ZERO-CORRECTED. (They are already zero-corrected because they were calculated as DOME_ON-DOME_OFF). IF YOU DON'T DO THIS, THE FLATS WILL BE ZERO-CORRECTED BY CCDPR, AND THIS IS VERY WRONG!

hedit irflat?.????.imh ZEROCOR "Corrected by DOME_OFF" add+

2. The flats may have zero-valued pixels, which will cause ccdpr to crash.

The low pixels should be replaced by a large number (here I chose saturation) so that after the division they will be close to 0. You may want to run the flats through imreplace as:

imreplace irflat?.????.imh 15000 lower=INDEF upper=1
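A Python sketch of why the replacement helps: a zero in the flat makes the division fail, while a large value turns the bad pixel into a small number that stands out in the mask and the final combine. The median normalization here is a stand-in for CCDMEAN, and the toy values are made up.

```python
from statistics import median


def replace_low(flat, value=15000.0, upper=1.0):
    """Like 'imreplace flat 15000 lower=INDEF upper=1': pixels <= upper become value."""
    return [value if v <= upper else v for v in flat]


def flatten(data, flat):
    """Divide data by the flat normalized to its median (a stand-in for CCDMEAN)."""
    scale = median(flat)
    return [d / (f / scale) for d, f in zip(data, flat)]


data = [800.0, 800.0, 800.0, 800.0]
flat = [1000.0, 1000.0, 0.0, 1000.0]  # one dead flat pixel
print(flatten(data, replace_low(flat)))
```

Calling flatten(data, flat) on the unreplaced flat raises ZeroDivisionError, which is the Python analogue of the ccdpr crash.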

3. Now we flatten the data

The data are now [ZF].

ccdr:

| (pixeltype = | "real real") | Output and calculation pixel datatypes |

| (verbose = | yes) | Print log information to the standard output? |

| (logfile = | "logfile") | Text log file |

| (plotfile = | "") | Log metacode plot file |

| (backup = | "") | Backup directory or prefix |

| (instrument = | "myiraf$/yalo_ir.dat") | CCD instrument file |

| (ssfile = | "myiraf$/yalo_ir.sub") | Subset translation file |

| (graphics = | "stdgraph") | Interactive graphics output device |

| (cursor = | "") | Graphics cursor input |

| (version = | "2:October 1987") | |

| (mode = | "") | |

| ($nargs = | 0) |

ccdpr:

| images = | "a*.imh" | List of CCD images to correct |

| (output = | "") | List of output CCD images |

| (ccdtype = | "") | CCD image type to correct |

| (max_cache = | 0) | Maximum image caching memory (in Mbytes) |

| (noproc = | no) | List processing steps only?\n |

| (fixpix = | no) | Fix bad CCD lines and columns? |

| (overscan = | no) | Apply overscan strip correction? |

| (trim = | no) | Trim the image? |

| (zerocor = | yes) | Apply zero level correction? |

| (darkcor = | no) | Apply dark count correction? |

| (flatcor = | no) | Apply flat field correction? |

| (illumcor = | no) | Apply illumination correction? |

| (fringecor = | no) | Apply fringe correction? |

| (readcor = | no) | Convert zero level image to readout correction? |

| (scancor = | no) | Convert flat field image to scan correction?\n |

| (readaxis = | "line") | Read out axis (column|line) |

| (fixfile = | "") | File describing the bad lines and columns |

| (biassec = | "") | Overscan strip image section |

| (trimsec = | "") | Trim data section |

| (zero = | "irdark45") | Zero level calibration image |

| (dark = | "") | Dark count calibration image |

| (flat = | "irflat?.imh") | Flat field images |

| (illum = | "") | Illumination correction images |

| (fringe = | "") | Fringe correction images |

| (minreplace = | 1.) | Minimum flat field value |

| (scantype = | "shortscan") | Scan type (shortscan|longscan) |

| (nscan = | 1) | Number of short scan lines\n |

| (interactive = | yes) | Fit overscan interactively? |

| (function = | "legendre") | Fitting function |

| (order = | 4) | Number of polynomial terms or spline pieces |

| (sample = | "*") | Sample points to fit |

| (naverage = | 1) | Number of sample points to combine |

| (niterate = | 3) | Number of rejection iterations |

| (low_reject = | 2.5) | Low sigma rejection factor |

| (high_reject = | 2.5) | High sigma rejection factor |

| (grow = | 0.) | Rejection growing radius |

| (mode = | "ql") |

myiraf$/yalo_ir.dat:

exptime exptime

imagetyp imagetyp

subset IRFLTID

| OBJECT | object | |

| DARK | zero | |

| FLAT | flat | |

| BIAS | zero | |

| MASK | other |

myiraf$/yalo_ir.sub

| 'H' | H |

| 'J' | J |

| 'K' | K |

You can rename the reduced data to something simple, as follows. If you use this command, check it carefully for typos!

imren ir000729.0*.imh %ir000729.0%r%*.imh

The r*.imh data are [ZF]

Make a mask image as follows. Here we use the dome flats corrected for DOME_OFF to make the mask. Note that there are very many warm pixels on the detector, and about 10% of these change flux during the night. If the warm pixels change flux between the ON and OFF images, they will be flagged as bad pixels here.

The philosophy of the masks is that all pixels in a normalized image that are below some value like 0.7 (or above an upper limit like 1.2) are probably bad and will be marked as bad pixels.

mask1.cl:

# to make the mask, use imhist and look for the limits

# first flatten the flats and remove the edge defects

#

string img

img = "ir000729.flath"

#

imdel("temp*.imh", >>& "dev$null")

imdel("mask.imh,maskdao.imh,mask.pl", >>& "dev$null")

imtrans(img,"temp1")

fmed("temp1","temp2", xwin=201, ywin=1, boundary="wrap")

imtrans("temp2","temp3")

imar(img, "/", "temp3", "mask")

imdel("temp*.imh", >>& "dev$null")

imrep mask[*,1:10] 0 lower=INDEF upper=INDEF

imrep mask[*,1020:1024] 0 lower=INDEF upper=INDEF

imrep mask[1:1,*] 0 lower=INDEF upper=INDEF

imrep mask[1021:1024,*] 0 lower=INDEF upper=INDEF

#

# now check the histogram and change the limits if needed.

#

imhist mask z1=0.4 z2=1.4 nbins=100

displ mask 1 zs- zr- z1=0.4 z2=1.4

mask2.cl

#

# good pix are 0, bad are 1 for IRAF mask

# the limits lll and uuu below need to be checked on the histogram

# each time you make the mask.

#

real lll,uuu

real hist1,hist2,hist3,xjunk,histsum,nax1,nax2,npixx,ratio

lll = 0.7

uuu = 1.19

#

imhist('mask',z1=lll,z2=uuu,list+,nbin=1) | scan(xjunk,hist1)

imhist('mask',z1=INDEF,z2=lll,list+,nbin=1) | scan(xjunk,hist2)

imhist('mask',z1=uuu,z2=INDEF,list+,nbin=1) | scan(xjunk,hist3)

histsum= hist1+hist2+hist3

hsel('mask','naxis1','yes') | scan(nax1)

hsel('mask','naxis2','yes') | scan(nax2)

npixx=nax1*nax2

ratio=(hist2+hist3)/npixx

printf("Fraction rejected=%9.3f\n",ratio)

#

imhist('mask',z1=lll,z2=uuu,list+,nbin=1)

imdel("temp*.imh")

imcopy mask temp

displ mask 1 zs- zr- z1=0.4 z2=1.4

imrep("mask", lower=INDEF, upper=lll, val=-1 )

imrep("mask", lower=uuu, upper=INDEF, val=-1)

imrep("mask", lower=lll, upper=uuu, val=0)

imar mask * mask mask

imcopy mask.imh mask.pl

# make DAOPHOT mask where bad pix are 0 and good are 1

imrename mask.imh maskdao

imar maskdao - 1 maskdao

imar maskdao * -1 maskdao

#

displ mask.pl 2 zs- zr- z1=0 z2=1

You can check frames 1,2 to see if the mask looks good.

1. Make a directory called "vig" and copy into it the K data from r*.imh, which are [ZF] data.

2. Fixpix r*.imh using

fixpix r*.imh mask=mask.pl

3. Edit out the stars and the galaxy.

We edit the stars out with the "b" aperture and a radius of 18. Edit out the stars first, then change the radius to 40 by typing ":rad 40", and then edit out the galaxy. You may have to use the "c" key instead if the galaxy is sitting on a large gradient. In this case you mark the lower left and upper right corners. The rectangle should be big and long in the column direction!

imedit r264 a264 aper="circular" radius=15 buf=10 wid=10

imedit r265 a265 aper="circular" radius=15 buf=10 wid=10

etc.

4. Divide the images by the first image in the dither sequence ==> b???.imh

imar a264 / a264 b264

imar a265 / a264 b265

etc.

5. You must make the b???.imh roughly equal to 1 before doing the filtering in the next steps.

Pick a statsec on the images where there is no bright star or galaxy. Figure out the minimum and maximum vignetting in the divided images. For dither=30, zlo=0.9 and zhi=1.1. These are very important! For larger dithers, the numbers must be more like 0.8 and 1.2. Run:

task normrat = home$scripts/normrat.cl

normrat b*.imh

normrat:

| images = | "b*.imh" | input images |

| (statsec = | "[50:600,25:1000]") | Stat sec |

| (sigma = | 2.5) | sigma clip for stats |

| (niter = | 10) | iterations for sigma clipping |

| (pre1 = | "b") | input prefix |

| (pre2 = | "c") | output prefix |

| (zlo = | 0.8) | Low cutoff for fmed |

| (zhi = | 1.2) | High cutoff for fmed |

| (xwin = | 1) | xwin for fmed |

| (ywin = | 351) | ywin for fmed |

| (outfile1 = | "temp1.cl") | output file for norm script1 |

| (outfile2 = | "temp2.cl") | output file for norm script2 |

| (outfile3 = | "temp3.cl") | output file for norm script3 |

| (imglist1 = | "tmp$tmp.8179fa") | |

| (mode = | "ql") |

This will produce 3 scripts, temp1.cl, temp2.cl, and temp3.cl.

To normalize the b???.imh, do

cl < temp1.cl

You can run getsky to check that the norm looks good.

NOTE THAT THE FIRST B???.IMH IMAGE IS 1.0 AND CAN BE IGNORED.

To produce the medianed flat, run

cl < temp2.cl

which will output c*.imh images.

6. Run prog50 to force 1.0 on the good part of the chip.

Measure xlo and xhi for the good column limits: xlo is where the vignetting starts on the first row, and xhi is where it starts on the last row. All pixels to the left of this boundary will be set to 1.0, and all pixels to the right will keep their values from the c???.imh image. The script temp3.cl has the basic commands, but YOU MUST EDIT THE XLO AND XHI VALUES.
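A Python sketch of what forcing 1.0 left of the vignetting boundary looks like. The prog50 source is not shown here, so the straight-line boundary from column xlo on the first row to column xhi on the last row is an assumption, as are the 0-based columns; IRAF columns start at 1.

```python
def force_unvignetted(image, xlo, xhi):
    """Set pixels left of a sloping boundary to 1.0 (0-based columns).

    The boundary is assumed to run linearly from column xlo on the first row
    to column xhi on the last row; pixels at or right of it keep their values.
    """
    nrows = len(image)
    out = []
    for y, row in enumerate(image):
        frac = y / (nrows - 1) if nrows > 1 else 0.0
        xb = xlo + (xhi - xlo) * frac
        out.append([1.0 if x < xb else v for x, v in enumerate(row)])
    return out


c_image = [[0.9] * 6 for _ in range(3)]
for row in force_unvignetted(c_image, xlo=4, xhi=2):
    print(row)
```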

Note that the following groups of dithers should have pretty much the same vignetting:

1,4,7

2,6

3,5

Find the xlo,xhi:

yalocen c*.imh iraf- zz1=0.9 zz2=1.1

# dither 2

!$myprog/prog50 c265.imh c265.imh 730 640

# dither 3

!$myprog/prog50 c266.imh c266.imh 770 680

# dither 4

!$myprog/prog50 c267.imh c267.imh 750 660

# dither 5

!$myprog/prog50 c268.imh c268.imh 770 680

# dither 6

!$myprog/prog50 c269.imh c269.imh 730 640

# dither 7

!$myprog/prog50 c270.imh c270.imh 750 660

Summary of xlo and xhi by dither:

dither 2: 730 640

dither 3: 770 680

dither 4: 750 660

dither 5: 770 680

dither 6: 730 640

dither 7: 750 660

These values really should not be changing!

The c???.imh data now represent the dither=x/dither=1 corrections. We need to divide these into the original r*.imh data in the upper directory. To keep the bookkeeping straight, let's first make an image for dither=1.

imcopy r264 vig01

imrep vig01 1 lower=INDEF upper=INDEF

Now rename the c*.imh data for dithers 2 to 7 to vig02, ... vig07. Check the result with:

hsel vig*.imh $I,dither yes

7. Now correct the r*.imh data. Go to the upper directory and run:

imren vig/vig*.imh .

hsel r*.imh $I,dither yes > in2

Edit in2 into commands like the following, dividing each frame by the vig image for its dither position:

imar r250 / vig01 f250

hedit f250 VIGCOR "Corrected for vignetting by vig01" add+ up+

imar r251 / vig02 f251

hedit f251 VIGCOR "Corrected for vignetting by vig02" add+ up+

imar r252 / vig03 f252

hedit f252 VIGCOR "Corrected for vignetting by vig03" add+ up+

imar r253 / vig04 f253

hedit f253 VIGCOR "Corrected for vignetting by vig04" add+ up+

imar r254 / vig05 f254

hedit f254 VIGCOR "Corrected for vignetting by vig05" add+ up+

etc.
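Editing in2 by hand is error-prone when there are many frames. A Python sketch that turns "image dither" lines, as printed by the hsel command above, into the same imar/hedit pairs; the helper name is hypothetical, and the r to f renaming and vig01...vig07 naming follow the example lines.

```python
def vignetting_script(hsel_lines):
    """Turn 'image dither' lines (from hsel r*.imh $I,dither yes) into imar/hedit commands."""
    commands = []
    for line in hsel_lines:
        image, dither = line.split()[:2]
        stem = image[:-4] if image.endswith(".imh") else image  # r250.imh -> r250
        out = "f" + stem[1:]                                     # r250 -> f250
        vig = "vig%02d" % int(float(dither))                     # 1 -> vig01
        commands.append("imar %s / %s %s" % (stem, vig, out))
        commands.append('hedit %s VIGCOR "Corrected for vignetting by %s" add+ up+' % (out, vig))
    return commands


for cmd in vignetting_script(["r250.imh 1", "r251.imh 2"]):
    print(cmd)
```

The printed lines can be saved to a file and run with cl < in the usual way.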

Make inj,inh,ink files for all the SN data. These will be used to make the sky.

del in*

files f*.imh > in1

hsel @in1 $I,irfltid yes | grep "J" - | fields - 1 > inj

hsel @in1 $I,irfltid yes | grep "H" - | fields - 1 > inh

hsel @in1 $I,irfltid yes | grep "K" - | fields - 1 > ink

Run irsky. MAKE SURE THAT THE INSUF AND OUTSUF ARE CORRECTLY SET.

irsky:

| images = | "@inh" | input images | |

| (statsec = | "[25:600,25:1000]") | Stat sec | |

| (sigma = | 2.5) | sigma clip for stats | |

| (niter = | 9) | iterations for sigma clipping | |

| (irfltid = | "IRFLTID") | keyword for filter | |

| (outimage = | "Sky") | Output root for sky image | |

| (nlow = | 0) | number of low pixels to reject | |

| (nhigh = | 1) | number of high pixels to reject | |

| (combine = | "median") | type of combine function | |

| (reject = | "minmax") | type of rejection | |

| (insuf = | "f") | Root suffix for input image | <== |

| (outsuf = | "s") | Root suffix for output image | <== |

| (imglist1 = | "t1.jnk") | ||

| (mode = | "al") |

You may have to adjust nhigh to reduce the print-through.

This program outputs a file called sub.cl which you run to do the sky subtractions.

cl < sub.cl

This is now sky subtracted data. All the data should be near 0 sky. You can check this with getsky.

task getsky = home$scripts/getsky.cl

For the final mosaic, you should set the bad pixels to a large number. Since saturation is 12000, 20000 ADU is a good value.

imar s*.imh / maskdao s*.imh divzero=20000

If you want to fix the bad pixels for pretty pictures:

fixpix s???.imh mask=mask.pl

The data will be [BZF] now.

The final mosaic is a piece of art, and I don't have the killer technique yet. The following does an excellent job if the night is photometric. The main problem we face is to detect and remove the warm pixels/cr's without removing flux from the stars.

The first step is to shift the data. If the seeing is >3 pix or so, use integer shifts.

We will now operate on the s*.imh images. Run:

!mv inj temp ; sed s/f/s/ temp > inj ; rm temp

!mv inh temp ; sed s/f/s/ temp > inh ; rm temp

!mv ink temp ; sed s/f/s/ temp > ink ; rm temp

del junk.dat

yalocenter @inj

!$myprog/prog48 junk.dat

cl < shift.cl

etc.

This produces integer-shifted images called temp*.imh. You can modify prog48 if you want real-valued shifts, but I would not recommend it.
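The format of junk.dat and the internals of prog48 are not described here. The following is only a sketch of the integer-shift idea. It assumes a hypothetical list of (image, x, y) centroids of one star in each frame, computes the shift that moves each frame onto the first one, rounds it to whole pixels, and prints IRAF imshift commands of the kind shift.cl presumably contains. A plausible reason to prefer integer shifts is that no interpolation is done, so warm pixels and cosmic rays stay confined to single pixels for the rejection step in imcombine.

```python
def integer_shifts(centroids):
    """Integer (dx, dy) that move each frame onto the first frame.

    centroids: list of (image, x, y) for the same star in every frame.
    """
    _, x0, y0 = centroids[0]
    shifts = []
    for image, x, y in centroids:
        dx = round(x0 - x)  # a star at x ends up at x0 after shifting by dx
        dy = round(y0 - y)
        shifts.append((image, dx, dy))
    return shifts


# Hypothetical centroids of one star in three dithered frames.
cents = [("s111", 512.3, 498.7), ("s112", 602.9, 499.1), ("s113", 421.6, 497.9)]
for i, (image, dx, dy) in enumerate(integer_shifts(cents), start=1):
    print(f"imshift {image} temp{i:02d} {dx} {dy}")
```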

The final combine can be made as follows.

Use the stsdas.hst_calib.wfpc package and run noisemodel on your data, iterating on the read noise and scalenoise. You will see a plot with a bunch of points at the lower left and two parallel sequences to the upper right. Fudge the read noise until the model passes through the lower left. Then fudge the scalenoise (in units of percent) until the model passes through the LOWER sequence. These are the stars. The upper sequence consists of warm pixels.

stsdas

hst_calib

wfpc

noisemodel s111 xb=10 yb=10
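For reject=ccdclip, imcombine predicts each pixel's noise in DN as sqrt((rdnoise/gain)^2 + DN/gain + (snoise*DN)^2). Here rdnoise is in electrons, gain is in e-/DN, and snoise is a fraction. The sketch below assumes noisemodel fits the same model, and reads the plot in those terms. At low signal the read-noise term sets a floor (the lower-left clump). At high signal the snoise term makes sigma grow in proportion to the signal, which is the sequence the stars follow. Pixels that scatter more than the model allows, such as warm pixels, sit above it. The function names are hypothetical, and the 1% snoise in the example is illustrative only.

```python
import math


def ccd_sigma(dn, gain, rdnoise, snoise):
    """Expected 1-sigma noise in DN for a pixel with signal dn (DN).

    gain    -- e-/DN
    rdnoise -- read noise in electrons
    snoise  -- scale noise as a fraction (noisemodel percent / 100)
    """
    signal = max(dn, 0.0)  # keep the Poisson term non-negative
    return math.sqrt((rdnoise / gain) ** 2 + signal / gain + (snoise * signal) ** 2)


def is_rejected(value, expected, gain, rdnoise, snoise, lsig=4.0, hsig=4.0):
    """True if value deviates from expected by more than lsig/hsig sigma."""
    resid = (value - expected) / ccd_sigma(expected, gain, rdnoise, snoise)
    return resid > hsig or resid < -lsig


# Detector values from these notes: gain 6.5 e-/ADU, read noise 13.4 e-.
for dn in (10.0, 100.0, 1000.0, 10000.0):
    print(dn, round(ccd_sigma(dn, 6.5, 13.4, 0.01), 1))
```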

Input these parameters to imcombine, remembering to convert the scalenoise from percent to a fraction. For instance, I found:

imdel t.imh,t.pl

# H
#imcomb temp??.imh t plf=t.pl comb=ave reject=ccd lth=-100 hth=13000 \
# gain=6.5 rdn=49 snoise=0.30 lsig=4 hsig=4

# K
#imcomb temp??.imh t plf=t.pl comb=ave reject=ccd lth=-200 hth=13000 \
# gain=6.5 rdn=104 snoise=0.30 lsig=4 hsig=4

# J
#imcomb temp??.imh t plf=t.pl comb=ave reject=ccd lth=-50 hth=13000 \
# gain=6.5 rdn=22 snoise=0.25 lsig=4 hsig=4

displ t.imh 1 zs- zr- z1=-20 z2=100

displ t.pl 2

When the detector had lots of warm pixels, I used:

imdel t.imh,t.pl

# H
#imcomb temp??.imh t plf=t.pl comb=ave reject=ccd lth=-50 hth=13000 \
# gain=6.5 rdn=72 snoise=0.60 lsig=6 hsig=5

# K
#imcomb temp??.imh t plf=t.pl comb=ave reject=ccd lth=-500 hth=13000 \
# gain=6.5 rdn=140 snoise=0.55 lsig=7 hsig=6

# J
imcomb temp??.imh t plf=t.pl comb=ave reject=ccd lth=-50 hth=13000 \
  gain=6.5 rdn=55 snoise=0.70 lsig=7 hsig=5

displ t.imh 1 zs- zr- z1=-20 z2=100

displ t.pl 2

Rename t.imh and t.pl to J.imh and J.pl (and likewise for H and K).

If the night was not photometric, we have to estimate a scale factor. I have not figured this out yet, but it will require scaling on the galaxy or on some stars, while excluding bad pixels from the calculation.
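No method is settled on here. Purely as an illustration, and not as the procedure of these notes, one common approach is sketched below. Measure the same stars in a reference frame and in the frame to be scaled, skip any star whose aperture touches a masked pixel, and take the median of the flux ratios so that a few remaining outliers do not drive the result. The function name, inputs and flux values are all hypothetical.

```python
from statistics import median


def scale_factor(ref_fluxes, frame_fluxes, touches_bad_pixel):
    """Median of ref/frame flux ratios, skipping stars on masked pixels.

    ref_fluxes, frame_fluxes -- aperture fluxes of the same stars in a
                                reference frame and in the frame to scale
    touches_bad_pixel        -- one boolean per star (True = skip it)
    """
    ratios = [
        ref / flux
        for ref, flux, bad in zip(ref_fluxes, frame_fluxes, touches_bad_pixel)
        if not bad and flux > 0
    ]
    if not ratios:
        raise ValueError("no usable stars for the scale factor")
    return median(ratios)


# Hypothetical fluxes: the frame is ~10% fainter (thin cloud), and star 4
# sits on a warm pixel that inflates its flux in that frame.
ref = [1000.0, 2000.0, 500.0, 800.0]
frame = [900.0, 1800.0, 450.0, 1500.0]
bad = [False, False, False, True]
print(scale_factor(ref, frame, bad))
```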

1. Why do the warm pixels come and go through the night? Sometimes I have very clean data and other times 2-3% of the pixels are >1000ADU.

2. Why is the Sky? frame not very flat? I see maybe 1-3% variations in the non-vignetted part of the detector. The sky frame has been divided by the dome flats and should be flat. There are not enough data to create a proper sky flat for division, so I am stuck with the present technique. Note that this can produce systematic errors (especially as a function of x), since the slope is usually in the same direction in x.

The dome white spot is canted about 8 deg with respect to the telescope ring. The flat is illuminated by a lamp in the middle of the ring. This cannot give very uniform illumination, and the general slope (and the vignetting problem) may be due in part to this.

3. Why does some of the data show a 2% discontinuity across the two detector halves *after* flatfielding? Is this a large variable bias?

/net/andicam/data/observer

To see what data is available, run:

cl < /uw50/nick/daophot/irafcl/yalo/ir/setup

hsel ir*.fits $I,IRFLTID,exptime,ncoadds,title yes

hsel ccd*.fits $I,CCDFLTID,exptime,title yes

/uw55/reu7/
    apr17/
        opt/
        ir/

.daophot:

setenv i20020416 /uw55/reu7/apr17/ir

alias i20020416 "cd $i20020416"

You can also set them up for IRAF as:

loginuser.cl:

set i20020416 = /uw55/reu7/apr17/ir/

copy /uw50/nick/daophot/irafcl/yalo/ir/* .

Create, or point to, a uparm$ directory holding the parameter files for the IR reductions. Here is my file:

setup:

set stdimage = imt2048

set uparm = /uw50/nick/uparm/yaloir/

noao

ctio

nickcl

imred

ccdred

astutil

digi

apphot

artdata

ccdred.instrument = "myiraf$yalo_ir.dat"

ccdred.ssfile = "myiraf$yalo_ir.sub"

loadit.format = "2048"

loadit.statsec = "700:800,700:800"

keep

cpimh ir*.fits,nick*.fits del+

equinox ==> epoch

observat ==> "ctio"

and move the jd to JD-OLD

I run the script "yalohead" to convert the FITS headers into something more standard for IRAF.

yalohead *.imh

The task now does the setjd and the setairmass. If you need to do it by hand, do this:

setjd *.imh date="UTDATE" time="UT" exposure="EXPTIME" epoch="EQUINOX"

setairmass *.imh

The normal observing procedure is to observe the SN at 2 dither positions with a given dither offset, say 40 units (20"). Since the vignetting changes with dither position, each dither position needs its own flat field, and the flats have to be taken at exactly the same dither positions. Because it takes a long time to make the flats, we have defaulted to using two dither positions.
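A quick conversion helps when comparing dither settings. The sketch below uses only two numbers from these notes: 1 dither unit = 0.5 arcsec and 0.222 arcsec per IR pixel. It turns a dither value into an offset in arcseconds and pixels. The function and constant names are illustrative.

```python
DITHER_ARCSEC_PER_UNIT = 0.5  # from the notes: 1 dither unit = 0.5 arcsec
PLATE_SCALE = 0.222           # arcsec per IR pixel


def dither_offset(units):
    """Return the dither offset as (arcsec, pixels)."""
    arcsec = units * DITHER_ARCSEC_PER_UNIT
    return arcsec, arcsec / PLATE_SCALE


for units in (20, 40):
    arcsec, pix = dither_offset(units)
    print(f"{units} units = {arcsec:.1f} arcsec = {pix:.1f} pix")
```

A 20-unit dither therefore moves the image about 45 pixels and a 40-unit dither about 90 pixels.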

In looking over these data, I found that we used two dither positions with a value of 20 for the SN H and K images, but the flats were taken at two dither positions with a value of 40! This is not good. In addition, we took the J data at 7 dithers. I remember deciding to do this because it was the only way to fill up the U time slot.

So basically the data are a mess and I will have to invent yet another way to reduce the data.

First check the tilt parameters:

hsel ir*.imh $I,tilt1,tilt2,tilt3,IRFLTID,title yes

hsel nick*.imh $I,tilt1,tilt2,tilt3,IRFLTID,title yes

Now run for the object frames:

dtilt:

| images = | "ir*.imh" | input images |
| (dither = | 20) | Tilt step: 10,20,30,etc |
| (tilt1 = | 1320) | Tilt position 1 |
| (tilt2 = | 2225) | Tilt position 2 |
| (tilt3 = | 1820) | Tilt position 3 |
| (imglist = | "tmp$tmp.562ga") | |
| (mode = | "ql") | |

dtilt ir*.imh dither=20

dtilt:

| images = | "nick*.imh" | input images |
| (dither = | 40) | Tilt step: 10,20,30,etc |
| (tilt1 = | 1320) | Tilt position 1 |
| (tilt2 = | 2225) | Tilt position 2 |
| (tilt3 = | 1860) | Tilt position 3 |
| (imglist = | "tmp$tmp.562ga") | |
| (mode = | "ql") | |

dtilt nick*.imh dither=40

Yeah, but here it gets messy. The J images have up to 7 dither positions, and the standards up to 4. To keep things simple, we will set the dither to 1 for all the J and standard star images, and use only the .0001 flat for these images.

To do this, make the file lists:

del in1

files ir*.imh > in1

hsel @in1 $I,IRFLTID,title yes | grep "SN" - | fields - 1 > inSN

hsel @in1 $I,IRfltid,title yes | grep "J" - | grep "SN" - | fields - 1 > inSNJ

hsel @in1 $I,IRfltid,title yes | grep "H" - | grep "SN" - | fields - 1 > inSNH

hsel @in1 $I,IRfltid,title yes | grep "K" - | grep "SN" - | fields - 1 > inSNK

hsel ir*.imh $I,IRFLTID,title yes | grep "P9" - | fields - 1 > instand

hedit @inSNJ dither 1 up+ ver-

hedit @instand dither 1 up+ ver-

Remove the junk images.

hsel *.imh $I,title yes | grep "junk" | fields - -1 > injunk

emacs injunk

ccdl @injunk

The IR detector has a numerical bias of 400 units. On top of that, a dark frame with the same exposure time as an object frame has warm pixels that behave like additional bias structure. It is very important that we take dark frames with the same integration times as the object frames. That is why we always choose the same integration times for JHK.

The best dark is an averaged dark taken at the same time as the object frame. Check to see if the darks look okay. Sometimes the first one is bad.

displ nickdark.0001 1 zs- zr- z1=400 z2=425

displ nickdark.0002 2 zs- zr- z1=400 z2=425

mkdir old

imren nickdark.0001 old

hedit nickdark*.imh imagetyp zero up+ ver-

zerocomb nickdark.????.imh out=irdark45 comb=med rej=minmax nlow=1 nhigh=1

displ irdark45 1 zs- zr- z1=400 z2=500

hedit irdark45 IMAGETYP zero up+ ver-

It is a good idea to look at the dark and also do an imhist to see if the number of hot pixels is excessive.

imhist irdark45

imhist irdark45 z1=0 z2=20000 nbin=10 list+

This looks reasonable to me.

| ADU | Pixels |
| 1000. | 1048361 |
| 3000. | 72 |
| 5000. | 51 |
| 7000. | 56 |
| 9000. | 20 |
| 11000. | 9 |
| 13000. | 7 |
| 15000. | 0 |
| 17000. | 0 |
| 18000. | 0 |
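Turning the imhist listing into a hot-pixel fraction makes "reasonable" concrete. The counts below are copied from the listing, and with z1=0, z2=20000 and nbin=10 each bin is 2000 ADU wide. The total comes to 1024 x 1024 = 1048576, so every pixel is accounted for, and only 215 pixels (about 0.02%) lie above 2000 ADU. The variable names are illustrative.

```python
# Counts per 2000-ADU bin from 'imhist irdark45 z1=0 z2=20000 nbin=10 list+'.
counts = [1048361, 72, 51, 56, 20, 9, 7, 0, 0, 0]

total = sum(counts)
hot = sum(counts[1:])  # pixels above the first bin, i.e. above 2000 ADU
print(total, hot, f"{100 * hot / total:.3f}%")
# 1048576 215 0.021%
```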

IMPORTANT! Whatever bias you are using, you must declare the image as a ZERO image.

Here we are going to create a flat field for each dither position using the single set of dome images. We will form the flats in the usual manner. We will reduce the data to [ZF] before sky subtraction to remove the vignetting.

1. Form the DOME_ON-DOME_OFF.

First, move the existing irflath.000?.imh and irdarkh.000?.imh images to a subdirectory. We need these names.

imren irflath.000?.imh old

imren irdarkh.000?.imh old

2. Run the following script, which will set up the flats correctly for the 2 dither positions.